The first time I explored Walrus Protocol, I realized something that most people still overlook: Walrus is not simply a storage layer. It is building a system where data behaves like an economic asset, not a technical output. If blockchains created digital money and DePIN networks created digital services, Walrus is creating something deeper — a stable, scalable data economy where supply, distribution, and reliability follow economic logic rather than traditional network scaling limits. We’ve spent years trying to store data more cheaply, redundantly, and efficiently. Walrus takes a different approach. It treats data as the foundation of a new economic system — one where incentive structures, protocol guarantees, redundancy strength, and availability behave like macroeconomic pillars, not just engineering problems.

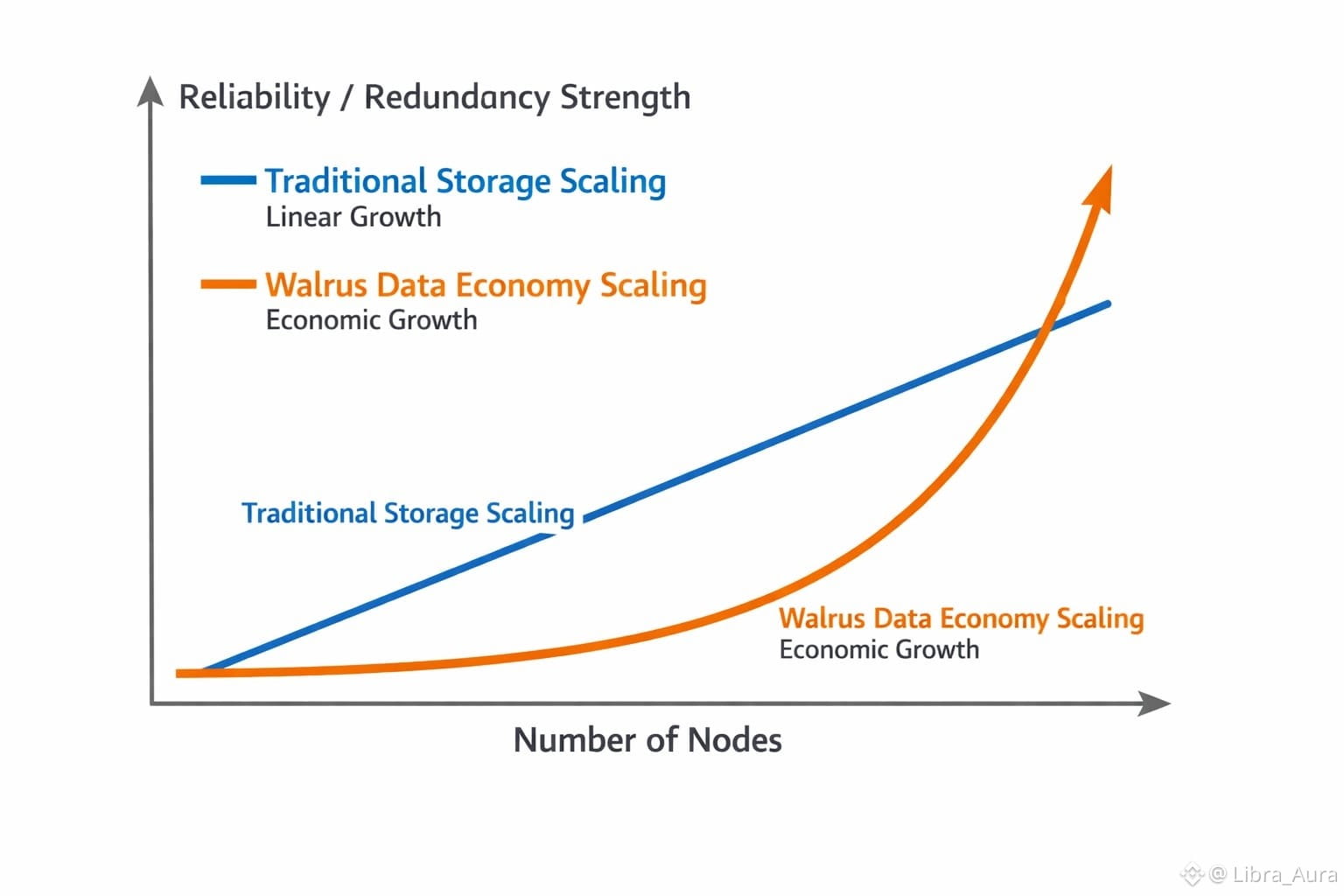

In traditional storage networks, scale works linearly: add more nodes, gain more capacity, gain more replication. But economic systems don’t scale linearly. They scale organically, driven by demand, liquidity, supply pressure, and risk balancing. Walrus mirrors this behavior by using erasure-coded data economics, where reliability emerges from distribution rules, not from brute-force replication. Instead of simply storing whole files, Walrus splits the data into encoded fragments that operate like “units of economic security.” No single node holds the full picture, yet the original can be reconstructed exactly from a sufficiently large subset of fragments, so the system tolerates node losses up to that threshold. This dynamic makes Walrus data behave like a diversified portfolio rather than a single point of failure. That’s not just storage innovation; it is economic engineering applied to digital assets.
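
To make the fragment idea concrete, here is a minimal Python sketch of a k-of-n erasure code. It is a toy Reed-Solomon-style scheme over the prime field GF(257), not Walrus's actual encoding, and every name in it (`encode`, `decode`, `P`, the choice of `k=3, n=7`) is my own assumption for illustration. It only shows the property the paragraph relies on: no fragment holds the whole file, yet any `k` fragments rebuild it.

```python
"""Toy k-of-n erasure code over GF(257). Illustrative only, not Walrus's scheme."""

P = 257  # smallest prime above 255, so every byte value is a field element


def _lagrange_eval(points, x):
    """Evaluate at x the unique polynomial through `points`, modulo P."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # pow(den, -1, P) is the modular inverse (Python 3.8+).
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def encode(data: bytes, k: int, n: int) -> dict:
    """Split `data` into n fragments (n < P); any k of them can rebuild it."""
    padded = data + bytes(-len(data) % k)  # zero-pad to a multiple of k
    fragments = {x: [] for x in range(1, n + 1)}
    for start in range(0, len(padded), k):
        block = padded[start:start + k]
        # Systematic layout: fragments 1..k carry the raw bytes of the block,
        # fragments k+1..n carry extra evaluations of the same polynomial.
        points = [(x + 1, block[x]) for x in range(k)]
        for x in range(1, n + 1):
            fragments[x].append(_lagrange_eval(points, x))
    return fragments


def decode(available: dict, k: int, length: int) -> bytes:
    """Rebuild the original bytes from any k surviving fragments."""
    if len(available) < k:
        raise ValueError("not enough fragments to reconstruct")
    xs = sorted(available)[:k]
    out = bytearray()
    for idx in range(len(available[xs[0]])):
        points = [(x, available[x][idx]) for x in xs]
        out.extend(_lagrange_eval(points, x) for x in range(1, k + 1))
    return bytes(out[:length])


# Usage: seven hypothetical nodes each hold one fragment; four of them vanish.
message = b"data as an economic asset"
frags = encode(message, k=3, n=7)
survivors = {x: frags[x] for x in (2, 5, 7)}
assert decode(survivors, k=3, length=len(message)) == message
```

The storage overhead here is n/k (7/3 in the usage example), compared with a factor of 5 for plain replication that survives the same four losses. That gap is the "portfolio" effect in miniature.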

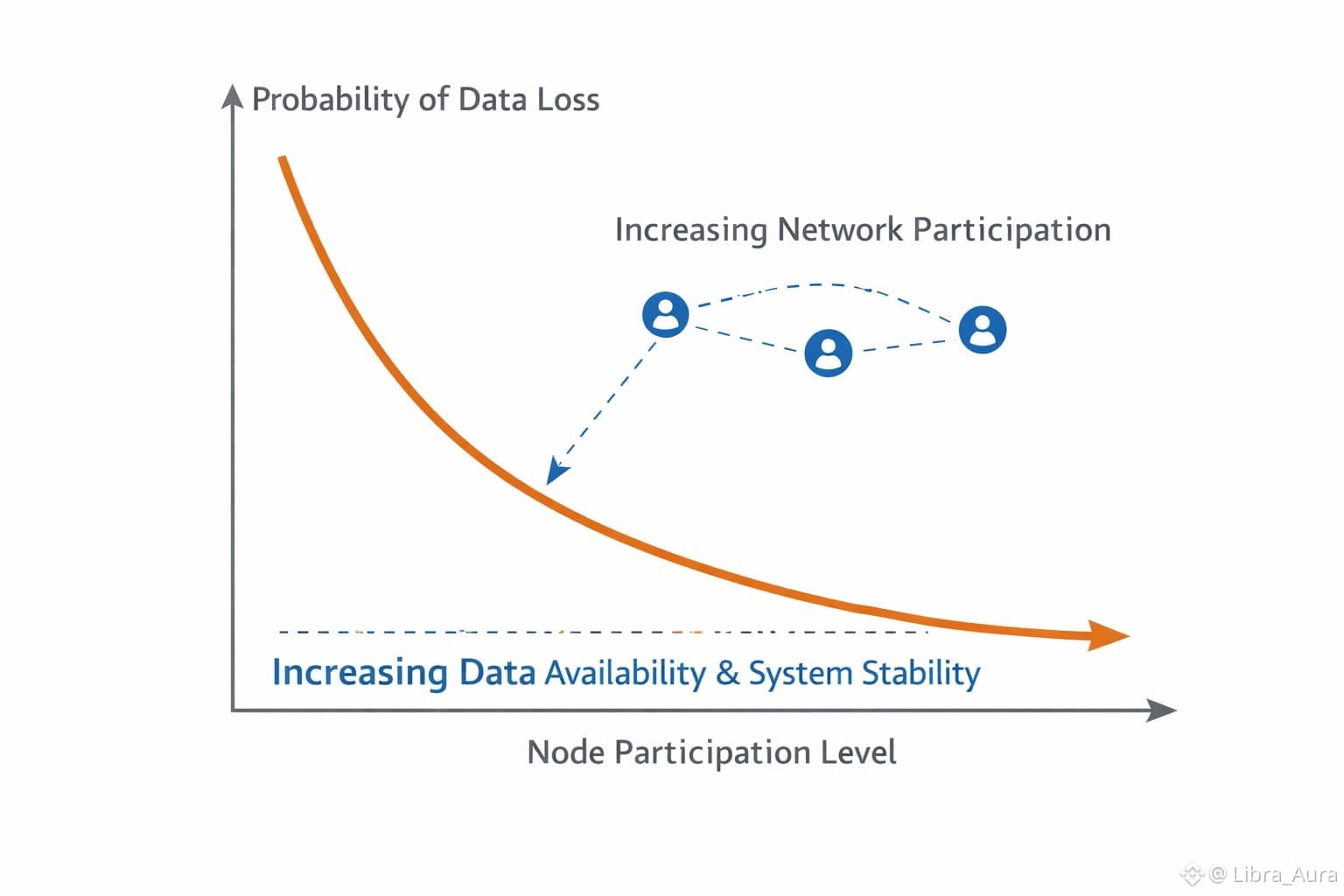

The biggest insight behind Walrus is that the internet is evolving from a network of servers to a network of economic actors. AI agents request data, chains validate proofs, decentralized applications reference external states, and autonomous compute systems rely on continuous data ingestion. In this world, reliability is not a convenience; it is an economic requirement. Walrus solves this by designing a system where reliability increases as participation grows. This is the opposite of traditional architectures, where scale introduces bottlenecks. In Walrus, scale introduces economic stability: more nodes → more fragments → higher redundancy → higher availability → lower marginal cost. The chain holds as long as nodes fail independently of one another and a file needs only a fixed fraction of its fragments to be rebuilt.
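
The "more nodes, higher availability" chain can be checked with a small back-of-the-envelope model. The sketch below is my own simplification, not Walrus's published analysis: it assumes each node is online independently with the same probability `p` (a hypothetical 0.8 here), and that a file is recoverable whenever at least `k` of its `n` fragments are reachable. The function name `unavailability` is likewise an assumption.

```python
from math import comb


def unavailability(n: int, k: int, p: float) -> float:
    """Probability that fewer than k of n fragments are reachable,
    assuming each node is independently online with probability p."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k))


# Same 3x storage overhead in every row; only the number of nodes changes.
# k=1, n=3 is plain three-way replication.
p = 0.8  # hypothetical per-node uptime
for n, k in [(3, 1), (30, 10), (300, 100)]:
    print(f"n={n:>3} k={k:>3} -> P(file unavailable) = {unavailability(n, k, p):.3e}")
```

Under these assumptions the failure probability drops sharply as the same overhead is spread across more nodes. The independence assumption is doing real work, though: correlated outages (one region, one hosting provider) would weaken the effect.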

One of the most underrated elements of Walrus is its shift away from “storage renting” and toward storage permanence as a public good. Instead of pricing based on how long data stays on a disk, Walrus prices based on availability guarantees, meaning users pay for the system’s ability to reconstruct data, not for idle capacity. It’s similar to how financial systems price risk — not assets. The result is an infrastructure where the protocol self-balances supply and demand through its cryptoeconomic design. When more data is added, the system redistributes load. When nodes enter, redundancy strengthens. When nodes exit, erasure coding compensates. This is not random behavior — this is economic equilibrium designed into the protocol.

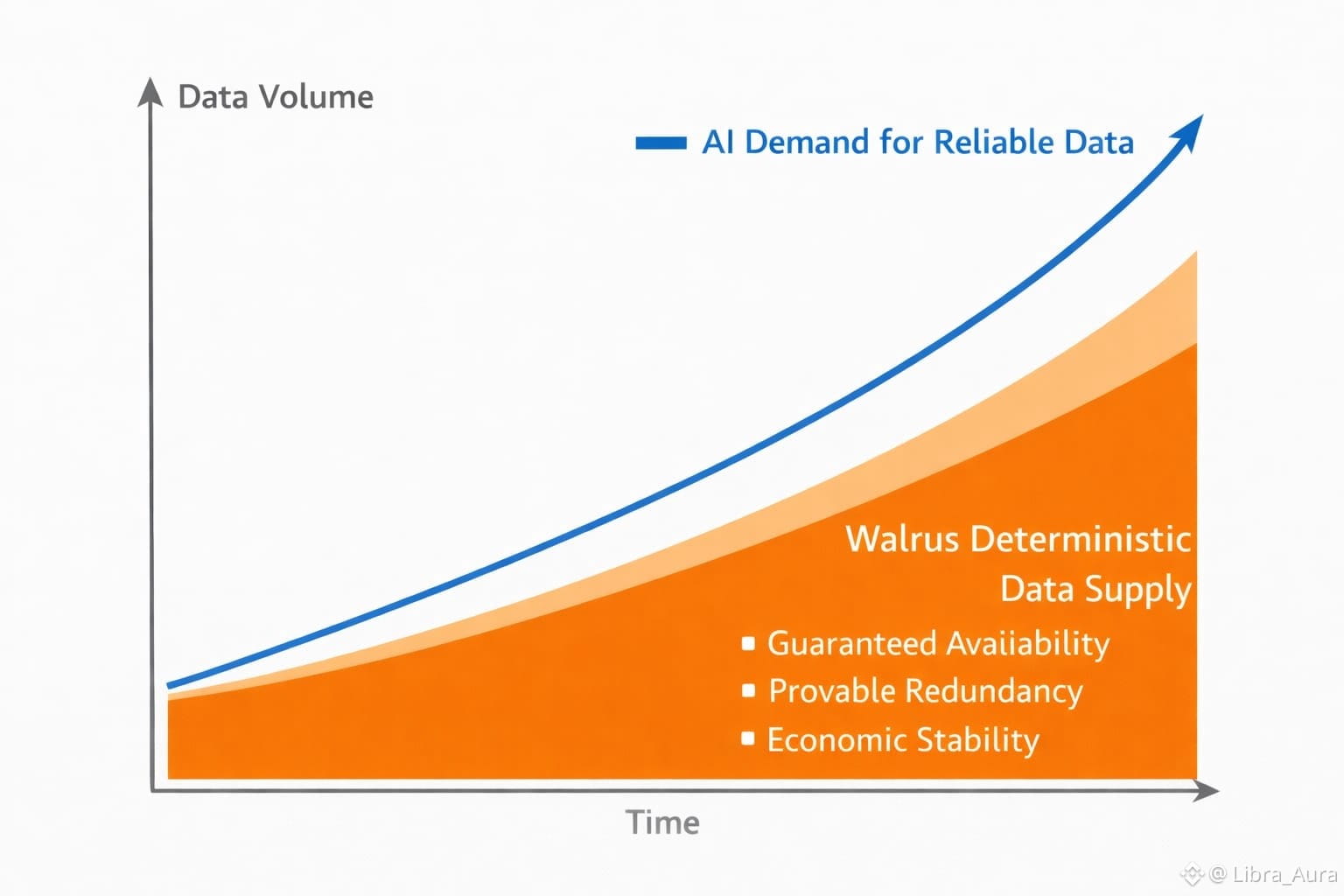

As AI systems grow, so does their demand for reliable data sources. AI cannot tolerate corrupted datasets, partial files, or missing fragments. Human users might retry a failed upload; machine economies will not. Walrus becomes a crucial economic layer because its design removes volatility from data availability. Every piece of data becomes a verifiably recoverable asset, guaranteed by math, not trust. In a world where AI agents will buy, trade, request, and process trillions of data points per day, this level of determinism is not optional — it’s essential. Walrus isn’t just supporting AI workflows; it’s building the monetary logic of AI data markets, where availability becomes predictable and cost becomes programmable.

What makes Walrus structurally different is that it treats distribution as a scalable economic resource instead of a limitation. Most networks degrade as they expand — latency increases, coordination becomes harder, and reliability flattens. Walrus flips that model by designing an economic structure where each additional node adds to redundancy strength rather than complexity. Economies grow when participation grows; Walrus applies that same principle to data durability. The protocol ensures that no single region, node, or actor can compromise availability because the fragments are spread across a vast distributed market of independent nodes, each contributing to systemic solvency.

The more I study Walrus, the clearer the pattern becomes: this is not a better Filecoin or a faster Arweave. It is an economic system, not a storage marketplace. Walrus is building the foundational infrastructure that future chains and applications, especially in AI, gaming, social, and real-time computation, will rely on for predictable, permanent, globally distributed data. These industries cannot operate on latency-prone, replication-heavy, or trust-dependent systems. They need infrastructure where reliability behaves like an economic invariant. And Walrus is the only system I have seen that treats data availability with the same rigor that monetary protocols apply to solvency.

In the coming decade, the internet will shift toward stateful, intelligent, autonomous agents. These agents will require economic-grade guarantees for any resource they depend on — compute, storage, bandwidth, identity, and data. Walrus is positioning itself as the data availability backbone for this new era. It doesn’t just store bits; it architecturally ensures that those bits behave like economically protected assets with deterministic recoverability. That design philosophy sets Walrus apart and pushes it into a category of its own.

If blockchains gave us programmable money, then Walrus is giving us programmable data economies — systems where data has provable value, provable redundancy, and provable permanence. The future won’t be shaped by which storage chain is cheaper; it will be shaped by which system treats data as an economic primitive. And Walrus is the first protocol that truly understands this shift.