Yesterday I went to the Ukrposhta branch to pick up a package. A routine errand. But the line wasn’t moving.

At the front stood an elderly woman - she was confused about something and kept asking questions. The tired operator answered in a monotone: "yes", "no", "yes"... Without any explanation. One minute stretched into twenty. People in line were getting restless. And I stood there thinking: here it is. Not a lack of information - a lack of explanation.

The same is happening with AI.

Do you remember when ChatGPT gave a completely confident answer - and then it turned out to be a total fabrication? Or when an AI agent recommended something and you couldn't tell why?

Without traceability (transparency of the logic), an answer means little. It’s just a simulation of reasoning.

Corporate clients, government regulators, and other serious users will not trust a black box. They need every step of the logic, the sources, and the intermediate conclusions to be verifiable and open to audit. Most AI solutions still do not provide this.

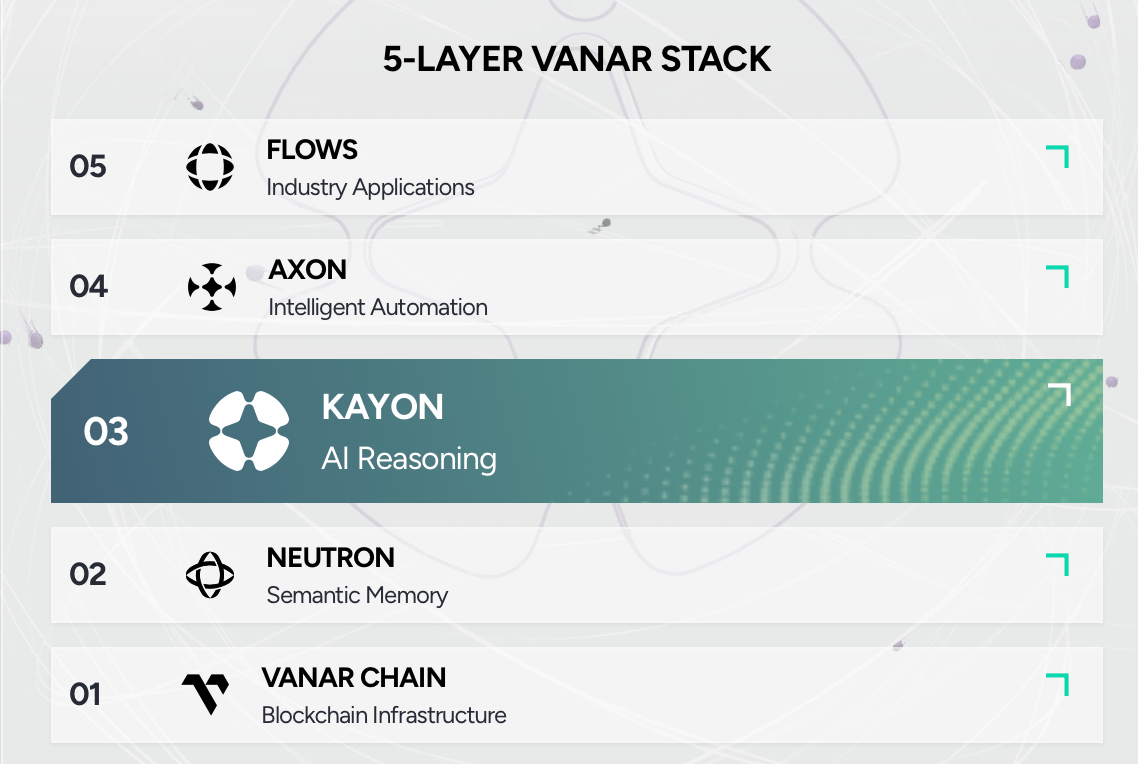

And here I thought of a good example of a different approach - Kayon from @Vanarchain.

Kayon is a reasoning engine (or, though it’s a bit academic, a thinking engine), meaning a system that not only provides an answer but shows the entire thought process.

Kayon is built on top of Neutron, the semantic memory layer of Vanar. It takes context from Seeds - not just text, but connections and meanings stored in myNeutron. It integrates external data: oracles, APIs, on-chain events, RWA metadata. It performs multi-layer reasoning (chain-of-thought, tree-of-thought, self-critique) and does all of this on-chain.

But that’s not the main point.

The main thing is what it outputs: not just the final answer, but a complete trace - which sources were used, which intermediate conclusions were reached, why alternatives were rejected, and a confidence score. Everything is recorded on the blockchain, immutable, with references to each step. It can be verified at any time.
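To make "a trace with references to each step" less abstract, here is a minimal sketch in Python. It is not Kayon's actual data model or API: the `TraceStep` fields and the `append_step` and `verify` helpers are hypothetical names chosen for illustration, and a plain in-memory list stands in for the blockchain. The idea it shows is simple: each step stores the hash of the previous one, so editing any earlier step breaks the chain.

```python
import hashlib
import json
from dataclasses import dataclass, asdict


@dataclass
class TraceStep:
    """One reasoning step. All field names here are hypothetical."""
    claim: str                        # the intermediate conclusion
    sources: list[str]                # where the claim came from
    rejected_alternatives: list[str]  # options considered and dropped
    confidence: float                 # score between 0.0 and 1.0
    prev_hash: str = ""               # reference to the previous step
    step_hash: str = ""               # hash of this step's content


def _digest(step: TraceStep) -> str:
    """Hash every field except step_hash itself."""
    payload = asdict(step)
    payload.pop("step_hash")
    encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def append_step(trace: list[TraceStep], step: TraceStep) -> None:
    """Link the step to the current tail of the trace and seal it."""
    step.prev_hash = trace[-1].step_hash if trace else ""
    step.step_hash = _digest(step)
    trace.append(step)


def verify(trace: list[TraceStep]) -> bool:
    """Return True only if no step was altered or reordered."""
    prev = ""
    for step in trace:
        if step.prev_hash != prev or step.step_hash != _digest(step):
            return False
        prev = step.step_hash
    return True


if __name__ == "__main__":
    trace: list[TraceStep] = []
    append_step(trace, TraceStep(
        "Complaints cluster around onboarding",
        ["feedback-export"], ["pricing is the main issue"], 0.8))
    append_step(trace, TraceStep(
        "Onboarding should be prioritized",
        ["step 1"], [], 0.7))
    print(verify(trace))         # True
    trace[0].confidence = 0.99   # tamper with an earlier step
    print(verify(trace))         # False
```

An on-chain system would get immutability from the ledger itself; the hash links here only make tampering detectable, which is the part an auditor cares about.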

Now for the specifics - because "reasoning engine" sounds abstract until you see real queries.

A manager might ask: "What recurring themes are in our clients' feedback?" or "What factors influenced the change in our pricing this year?" - and receive not just an answer, but a complete trace of the logic with sources.

The sales team might ask: "What objections most often arise in the pipeline?" or "What proposals led to closed deals?" Product and engineering teams: "How do user queries relate to the technical roadmap?" or "How did users react to the latest release?"

And separately - compliance. In regulated industries, Kayon together with Neutron provides immutable audit trails for key decisions, proof of authorship, access history, and versioning. This is not marketing - this is what the corporate sector really demands from AI solutions today.
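What "access history and versioning" can look like in practice is easier to see in a small sketch. This is an assumption-laden illustration, not how Kayon or Neutron implement it: `DecisionRecord`, `Version`, and their methods are hypothetical, everything lives in memory, and the author is only recorded as a name. Real proof of authorship would need a digital signature, which is left out here.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Version:
    """An immutable version entry (hypothetical structure)."""
    number: int
    author: str
    content_hash: str
    parent_hash: str


class DecisionRecord:
    """Append-only version and access history for one decision."""

    def __init__(self) -> None:
        self.versions: list[Version] = []
        self.access_log: list[tuple[str, int]] = []

    @staticmethod
    def _hash(parent_hash: str, content: str) -> str:
        data = (parent_hash + content).encode("utf-8")
        return hashlib.sha256(data).hexdigest()

    def commit(self, author: str, content: str) -> Version:
        """Add a new version that points at the previous one."""
        parent = self.versions[-1].content_hash if self.versions else ""
        version = Version(len(self.versions) + 1, author,
                          self._hash(parent, content), parent)
        self.versions.append(version)
        return version

    def read(self, reader: str, number: int) -> Version:
        """Return a version and log who looked at it."""
        version = self.versions[number - 1]
        self.access_log.append((reader, number))
        return version

    def matches(self, number: int, content: str) -> bool:
        """Check that the given text is what was committed."""
        version = self.versions[number - 1]
        return self._hash(version.parent_hash, content) == version.content_hash
```

An auditor holding the text of a decision can call `matches` to confirm it is the version that was recorded, and `access_log` shows who read which version.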

I just reread what I wrote and realized that something still bothered me about it, and then I remembered what. While preparing this article, I dug through a lot of press releases. Again and again I ran into promises that "... we have AI-reasoning", when under the hood there was just a prompt to an external LLM without any on-chain trace. In contrast, Kayon is a complete and working architectural solution, not a marketing wrapper.

Ordinary AI - an oracle that delivers a prophecy and falls silent.

Kayon - a scientist who writes a peer-reviewed article: methodology, data, conclusions, everything is open.

Just like in that queue at Ukrposhta - one simple explanation would have changed everything. $VANRY in this case is used to pay for reasoning cycles, trace access, and audit queries - this is how the token is integrated into the actual use of the infrastructure.