AI-first infrastructure is not the breakthrough. The breakthrough is having a neutral settlement layer that AI systems can’t avoid.

Most people miss it because they treat “AI” as the product, not as a new kind of user that needs coordination.

It changes everything for builders and users because the hard part becomes accountability: who did what, when, and what the system will accept as final.

I started paying attention to this when I watched good automation fail in boring ways: payments that “looked done” but weren’t, approvals that got reversed, and bots that behaved perfectly in a sandbox but broke the moment they touched real money or real users. Nothing was dramatic, just small mismatches between what an app assumed and what the wider world actually recognized. Over time, you learn that reliability is a feature, and it’s usually the most expensive one to buy later.

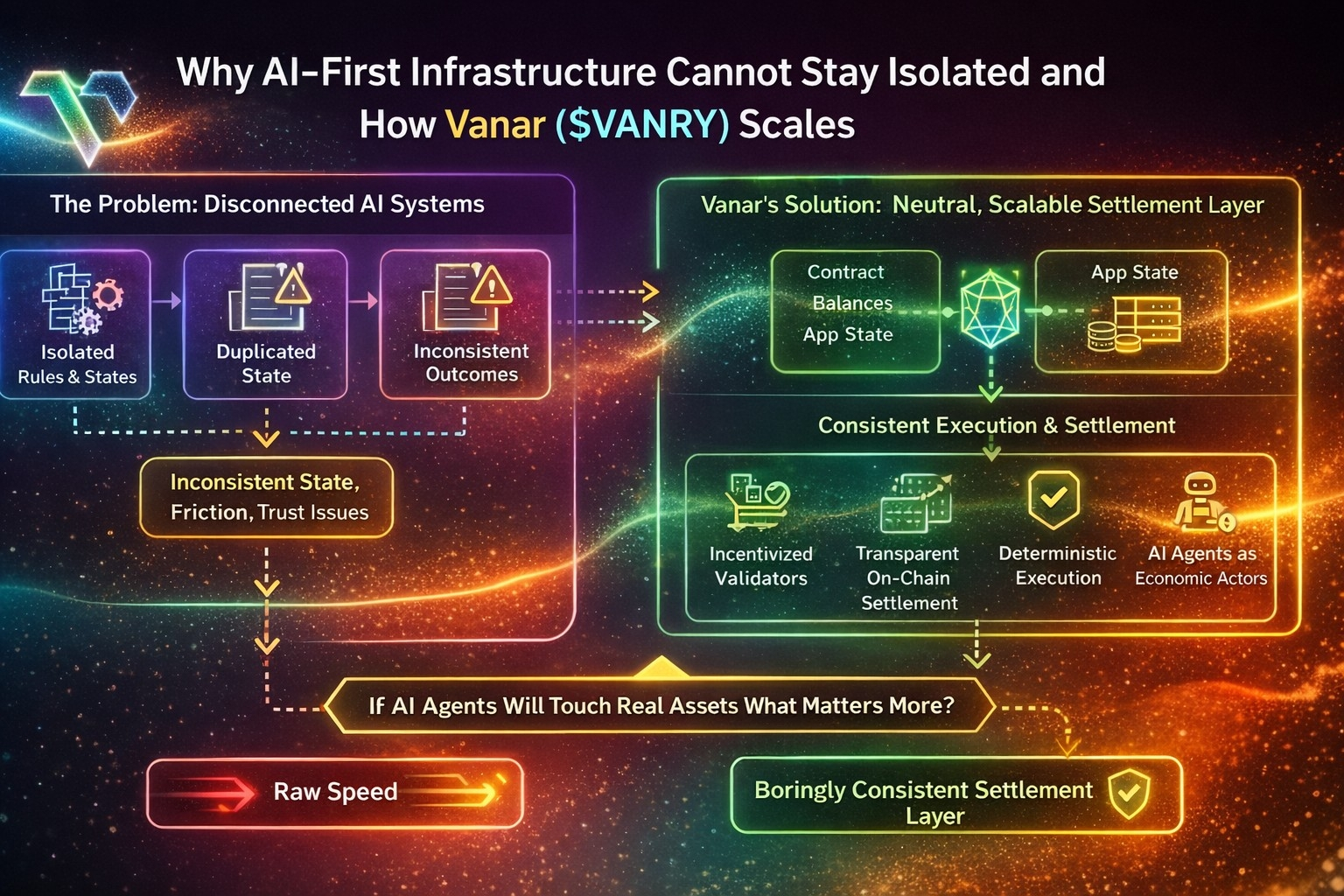

The concrete friction is simple: AI systems can’t stay isolated because their value comes from acting across many apps, wallets, games, marketplaces, and data sources. The moment an AI agent moves from “suggesting” to “executing,” it needs a place where actions are recorded, ordered, and settled in a way other systems will respect. If every app runs its own rules, you get duplicated state, inconsistent outcomes, and a constant argument about what is real: did the transfer happen, did the item mint, did the permission change, did the payout clear? That’s not an AI problem; it’s an infrastructure problem that AI makes more frequent and more adversarial.

It’s like trying to run a city where every neighborhood prints its own time and calls it “official.”

Vanar’s scalable path, seen through this lens, is less about adding isolated AI features and more about being a consistent execution and settlement environment that other systems can plug into without renegotiating trust each time. Focus on one core idea: shared, deterministic state that turns “agent intent” into verifiable outcomes. If an AI agent is going to act on a user’s behalf, the network has to give everyone the same answer to one question: what is the current state, and which changes are valid?

Mechanically, that means a straightforward on-chain state model where accounts and contracts (or application logic) produce a single canonical ledger of balances, permissions, and application state. A transaction, whether submitted by a user, a service, or an agent acting under delegated authority, enters a mempool, gets ordered by validators, and is executed against the current state. Validators verify signatures and rules, run the state transition, and commit the new state once consensus finalizes the block. The key is that verification is public: anyone can check that the transition followed the protocol rules, even if they don’t trust the agent that initiated it.
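To make “deterministic state transition” concrete, here is a minimal sketch in Python. It is a toy model, not Vanar’s actual implementation: the names `Transfer`, `apply_transfer`, and `execute_block` are hypothetical, signature verification and consensus are left out, and the only rules enforced are a per-sender nonce and a balance check. The point it illustrates is narrow: given the same starting state and the same ordered transactions, anyone who replays them gets the same result.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transfer:
    """Hypothetical transaction shape; real chains also carry a signature."""
    sender: str
    recipient: str
    amount: int
    nonce: int  # must equal the sender's next expected nonce


def apply_transfer(balances, nonces, tx):
    """Return new (balances, nonces), or raise ValueError if tx is invalid.

    Pure function: the input dicts are never mutated, so the transition
    can be replayed by any observer against the same prior state.
    """
    if tx.amount <= 0:
        raise ValueError("amount must be positive")
    if nonces.get(tx.sender, 0) != tx.nonce:
        raise ValueError("unexpected nonce")
    if balances.get(tx.sender, 0) < tx.amount:
        raise ValueError("insufficient balance")

    new_balances = dict(balances)
    new_nonces = dict(nonces)
    new_balances[tx.sender] = new_balances.get(tx.sender, 0) - tx.amount
    new_balances[tx.recipient] = new_balances.get(tx.recipient, 0) + tx.amount
    new_nonces[tx.sender] = tx.nonce + 1
    return new_balances, new_nonces


def execute_block(balances, nonces, ordered_txs):
    """Apply transactions in the agreed order, skipping invalid ones.

    Returns the new state plus the list of transactions that were applied.
    """
    applied = []
    for tx in ordered_txs:
        try:
            balances, nonces = apply_transfer(balances, nonces, tx)
            applied.append(tx)
        except ValueError:
            continue  # invalid under current state: no effect on the ledger
    return balances, nonces, applied
```

If `alice` starts with 10 units and an agent submits two 7-unit transfers in a row, the first succeeds and the second is rejected for insufficient balance. The ordering decides which one wins, which is why agreeing on order is part of settlement rather than an implementation detail.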

Incentives are what make that promise stick on bad days. Validators stake VANRY to participate and to make misbehavior costly. Fees exist to price scarce resources (block space and execution) and to prevent spam, especially when “agents” can generate actions at machine speed. Governance exists to adjust the parameters that inevitably need tuning over time: fee policy, staking requirements, limits that shape throughput versus safety, and the rules around upgrades. None of this guarantees that the network is magically “safe,” but it does mean that the system has a defined way to coordinate change rather than relying on informal trust between apps.

Failure modes still matter. If validators go offline or disagree, liveness can degrade and finality can slow, which is deadly for automation that assumes instant settlement. If validators censor, some actions can be delayed even if they are valid. If an agent’s delegated permissions are too broad, the chain will faithfully execute a bad decision, because blockchains don’t know intent, only authorization and rules. And if developers build on external dependencies (off-chain data, bridges, custodians), those dependencies can fail while the chain remains “correct,” creating a gap between ledger truth and user experience. What is guaranteed is narrow: valid transactions, correctly authorized, will execute according to protocol rules and be recorded once final. What is not guaranteed is good judgment, good data, or good UX built on top.
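The delegated-permissions risk is easiest to see in code. The sketch below is an assumption-laden illustration, not a Vanar API: `Delegation` and `authorize` are hypothetical names, and the three limits (an action allowlist, a cumulative spend cap, and an expiry block height) are just one plausible way to keep a grant narrow. The check can only answer “is this authorized?”, never “is this a good idea?”

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Delegation:
    """Hypothetical grant a user gives to an agent."""
    agent: str
    allowed_actions: frozenset  # e.g. frozenset({"transfer"})
    spend_limit: int            # total units the agent may spend
    expires_at_block: int       # last block height at which the grant is valid


def authorize(grant, agent, action, amount, spent_so_far, block_height):
    """Return True only if the request fits inside the grant.

    This checks authorization, not intent: a foolish but in-scope
    request still passes.
    """
    if agent != grant.agent:
        return False
    if block_height > grant.expires_at_block:
        return False
    if action not in grant.allowed_actions:
        return False
    if amount < 0:
        return False
    return spent_so_far + amount <= grant.spend_limit
```

A grant limited to `"transfer"` with a 100-unit cap bounds the damage a misbehaving agent can do. A grant with no cap and no expiry would pass the same checks for any amount, and the ledger would record the result as final.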

Uncertainty: if AI agents become meaningful economic actors, adversaries will target the weakest coordination point (validators, wallets, permissioning, or off-chain hooks), and real-world security will depend as much on operational discipline as on protocol design.

If you assume AI agents will touch real assets and real identities, what do you think matters more: raw speed, or a settlement layer that’s boringly consistent under stress?