A few days ago I sat down for tea with a logistics operator who manages cross-border supply chains across Asia and the Middle East. He had recently deployed AI-driven customer service and automated scheduling across his company. What began as a cost-saving upgrade turned into an operational crisis.

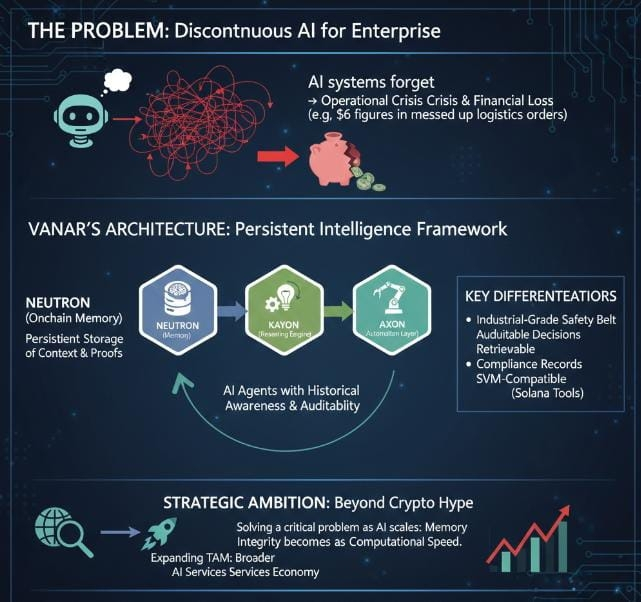

The system processed orders quickly, yet it forgot context. A returning client had special handling instructions that had been agreed the week before. The AI agent priced and routed the shipment as if it were a first-time order. Containers were sent to the wrong port, and invoices reflected incorrect terms. The total damage crossed six figures before humans intervened.

His frustration was not about intelligence. It was about continuity. The system could reason in the moment, but it could not remember with accountability. In industrial environments, discontinuous intelligence is not a bug. It is a liability.

That conversation reframed what was discussed recently on stage in Dubai. At the AIBC conference, Vanar CEO Jawad Ashraf did not focus on token metrics or throughput benchmarks. He framed a larger issue: if AI is to function as a global growth engine, it must rely on memory that does not disappear between sessions or platforms.

Vanar positions its architecture around this principle. Through its on-chain memory layer, known as Neutron, the network enables semantic compression and persistent storage of documents, proofs, and contextual data directly on chain. This is not simple file storage. It is structured memory that intelligent agents can query and interpret over time.

Kayon adds reasoning capabilities, while Axon enables automation across workflows. Together they create a framework in which AI agents can operate with historical awareness rather than from isolated prompts. For enterprises, this becomes an industrial-grade safety belt. Decisions can be audited. Context can be retrieved. Compliance records remain accessible without dependence on external servers.
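To make "context can be retrieved" and "decisions can be audited" concrete, here is a minimal Python sketch of an append-only, hash-chained memory log. It is only an illustration of the general idea, not Vanar's Neutron, Kayon, or Axon API. The class `MemoryLog`, its methods, and the client identifiers are all hypothetical names chosen for this example.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(client_id: str, note: str, prev: str) -> str:
    """Hash one record together with the hash of the record before it."""
    body = {"client_id": client_id, "note": note, "prev": prev}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class MemoryLog:
    """Hypothetical append-only store: each entry commits to its predecessor."""
    entries: list = field(default_factory=list)

    def append(self, client_id: str, note: str) -> str:
        """Record a piece of context and return its hash."""
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        h = _digest(client_id, note, prev)
        self.entries.append(
            {"client_id": client_id, "note": note, "prev": prev, "hash": h}
        )
        return h

    def recall(self, client_id: str) -> list:
        """Return every note stored for a client, oldest first."""
        return [e["note"] for e in self.entries if e["client_id"] == client_id]

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev = GENESIS
        for e in self.entries:
            if e["prev"] != prev:
                return False
            if e["hash"] != _digest(e["client_id"], e["note"], e["prev"]):
                return False
            prev = e["hash"]
        return True


log = MemoryLog()
log.append("client-42", "special handling agreed last week")
log.append("client-7", "standard terms")
print(log.recall("client-42"))  # context an agent could check before pricing
print(log.verify())             # audit: has any earlier record been altered?
```

In the logistics story above, an agent that called something like `recall` before pricing would have seen the returning client's special handling instructions. The hash chain is what makes the record auditable: changing an old note changes its hash, so `verify` fails.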

The strategic ambition is clear. Instead of competing only within the crypto narrative, Vanar is expanding its total addressable market toward the broader AI services economy. Traditional enterprises do not care whether a network is labeled L1 or L2. They care whether automated systems can reduce costs without introducing catastrophic errors.

By aligning blockchain infrastructure with the reliability demands of AI adoption, Vanar attempts to solve a problem that will intensify as automation scales. Memory integrity becomes as critical as computational speed. In this framing, the token is not the story. The architecture is.

Markets often reward short-term volatility over structural positioning. Yet if enterprises begin selecting infrastructure based on reliability and persistent intelligence, then projects built around continuity rather than hype may gain durable relevance.

Vanar does not seek to be another loud voice in the speculative arena. It aims to become foundational infrastructure for accountable AI systems operating in real economic environments. If that thesis materializes, the narrative shifts from price action to productivity impact. In an era where forgetting can cost millions, memory becomes strategy.