Last year, I was standing outside a government office in the heat, trying to pay a document fee through my banking app. The app froze after the money was deducted. No receipt. No confirmation. Just a spinning wheel. The clerk shrugged and told me to “come tomorrow.” I had the debit alert. The system had no record. I was stuck between two truths. 😐

That moment felt small, but it exposed something bigger. The money moved. The system did not agree. The data existed. The institution did not trust it. I wasn’t dealing with a technical glitch. I was dealing with fragmented authority — multiple databases pretending to be one reality.

We talk about speed like it’s the ultimate solution. Faster internet. Faster payment rails. Faster blockchains. But what I experienced wasn’t a speed problem. It was a coordination problem. The left hand of the system did not understand what the right hand had already done.

The modern digital economy is like a city where every building has its own clock. Some are ahead. Some are behind. They all claim to tell time. None of them synchronize. 🕰️

If you zoom out, that fragmentation explains why transactions fail, why settlement takes days, and why institutions still rely on reconciliation departments. Finance isn’t slow because computers are slow. It’s slow because databases don’t agree — and no single layer has contextual understanding of what data actually means.
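To make the reconciliation problem concrete, here is a minimal sketch of what a reconciliation step does: it compares two ledgers that each claim to be the truth and reports where they disagree. Everything in it is an assumption for illustration, including the `Entry` type, the shared `ref` field, and the sample amounts. Real systems often lack even a shared reference, which is part of why this work ends up manual.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entry:
    ref: str           # hypothetical shared transaction reference
    amount_cents: int  # amount in minor units to avoid float rounding


def reconcile(bank: list[Entry], agency: list[Entry]) -> dict[str, list[str]]:
    """Compare two ledgers by reference and report where they disagree."""
    bank_by_ref = {e.ref: e for e in bank}
    agency_by_ref = {e.ref: e for e in agency}

    # References one side has recorded and the other has not.
    missing_at_agency = sorted(bank_by_ref.keys() - agency_by_ref.keys())
    missing_at_bank = sorted(agency_by_ref.keys() - bank_by_ref.keys())

    # References both sides know about but with different amounts.
    amount_mismatch = sorted(
        ref
        for ref in bank_by_ref.keys() & agency_by_ref.keys()
        if bank_by_ref[ref].amount_cents != agency_by_ref[ref].amount_cents
    )
    return {
        "missing_at_agency": missing_at_agency,
        "missing_at_bank": missing_at_bank,
        "amount_mismatch": amount_mismatch,
    }


# The bank has debited TX-1, but the agency has no record of it.
bank_ledger = [Entry("TX-1", 5000), Entry("TX-2", 1200)]
agency_ledger = [Entry("TX-2", 1200)]
report = reconcile(bank_ledger, agency_ledger)
print(report)
```

In this toy run, `TX-1` shows up under `missing_at_agency`: the debit alert exists, the receipt does not. The code can detect the disagreement, but it cannot decide which side is right. That decision is the coordination problem.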

That’s the structural lens I began using: the real bottleneck is not transaction throughput; it’s semantic coordination. Systems can move numbers quickly. They struggle to understand relationships between those numbers.

This is why even the most advanced financial systems still depend on manual audits, compliance layers, and institutional trust hierarchies. Regulations exist because data alone is not trusted. Institutions like clearing houses and custodians exist because shared truth is fragile.

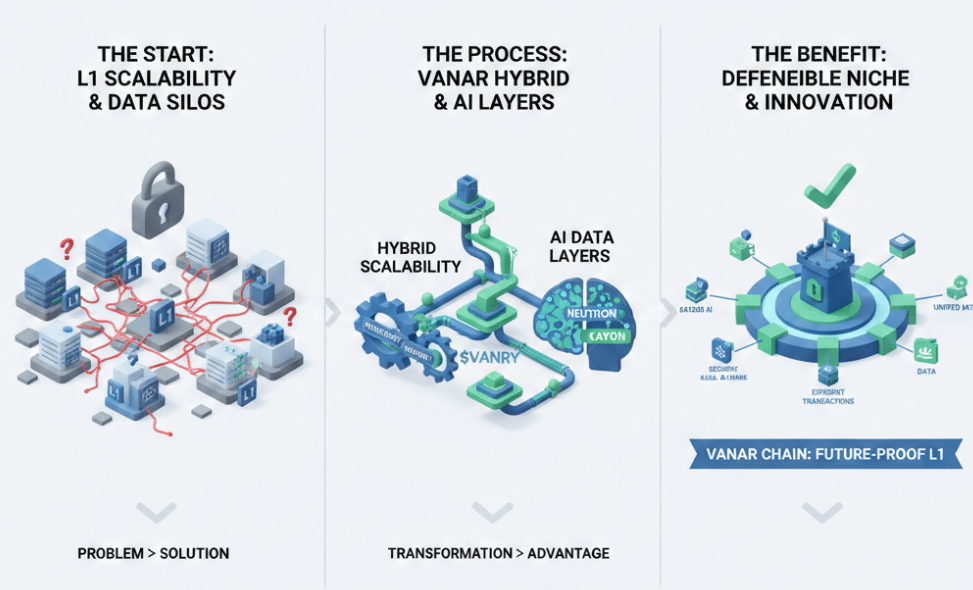

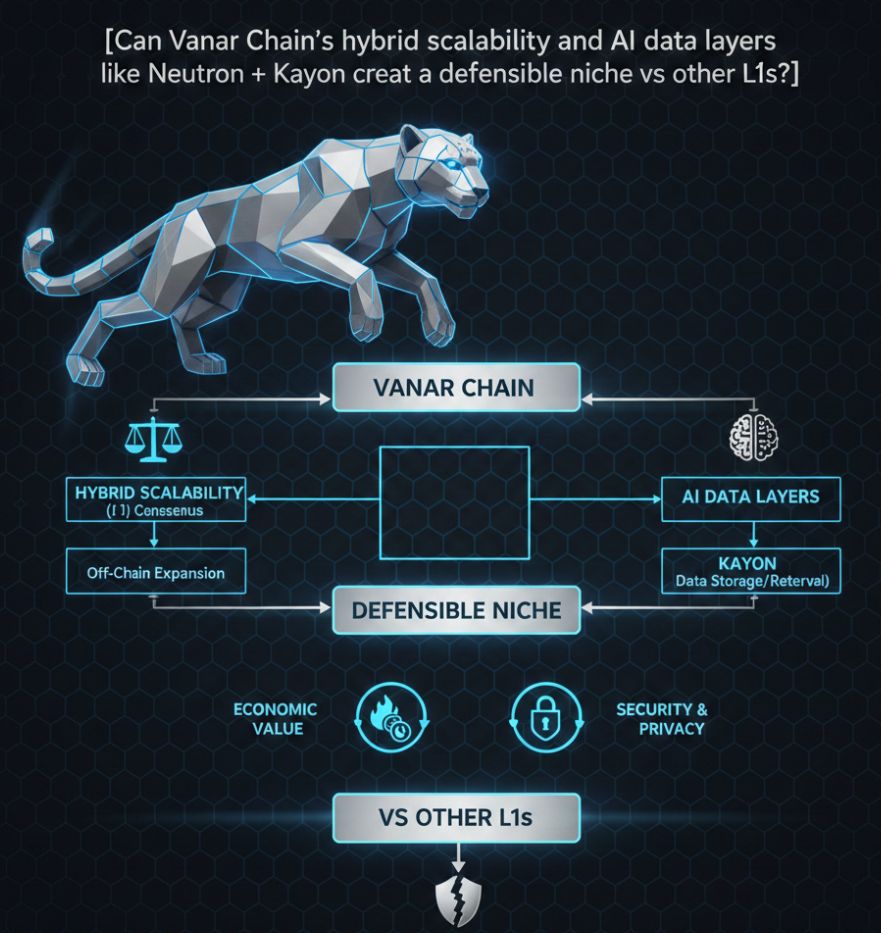

When people praise networks like Ethereum for decentralization or Solana for raw speed, they are often discussing performance metrics. Ethereum optimized for security and programmability. Solana optimized for execution speed. Both solve real constraints. But neither directly solves semantic fragmentation — the problem of contextual data coherence across layers.

Ethereum’s ecosystem, for example, is powerful but layered. Execution happens on one layer, scaling on another, data availability elsewhere. It works, but coordination complexity grows with each layer. Solana achieves impressive throughput, yet high performance does not equal contextual intelligence. Speed without interpretive structure just spreads errors faster. ⚡

The deeper issue is incentive alignment. Validators secure networks. Developers build applications. Users generate activity. But no structural layer ensures that transaction data is contextually meaningful beyond execution.

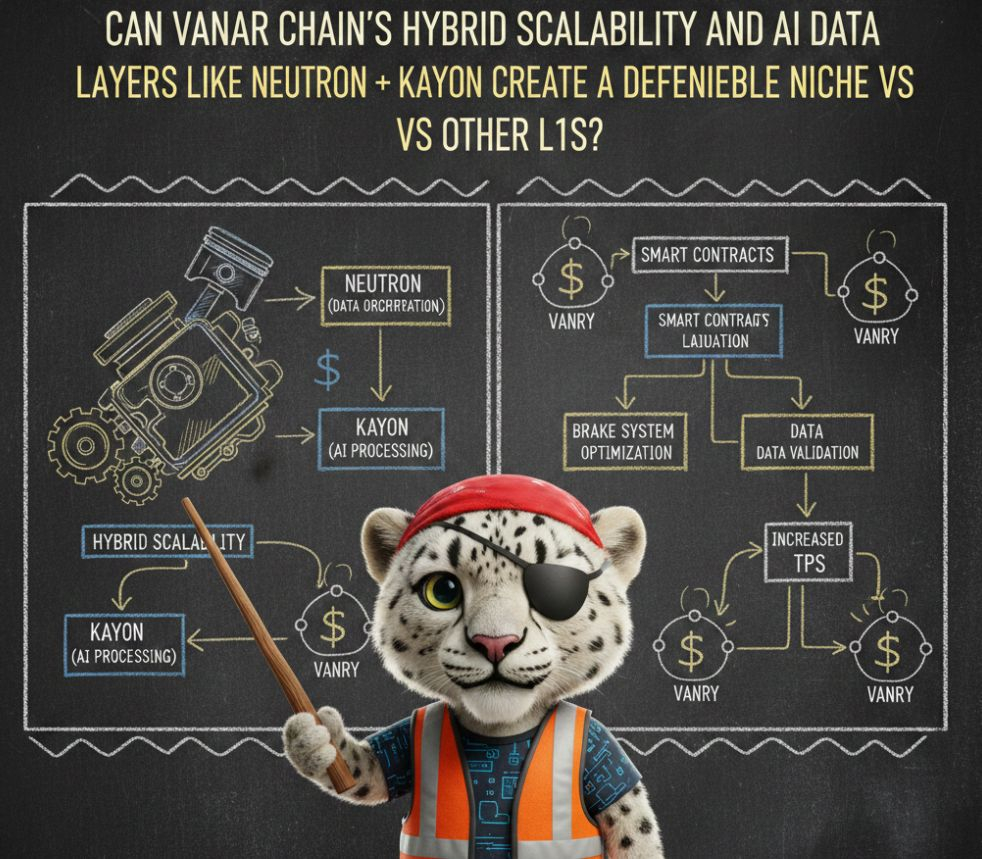

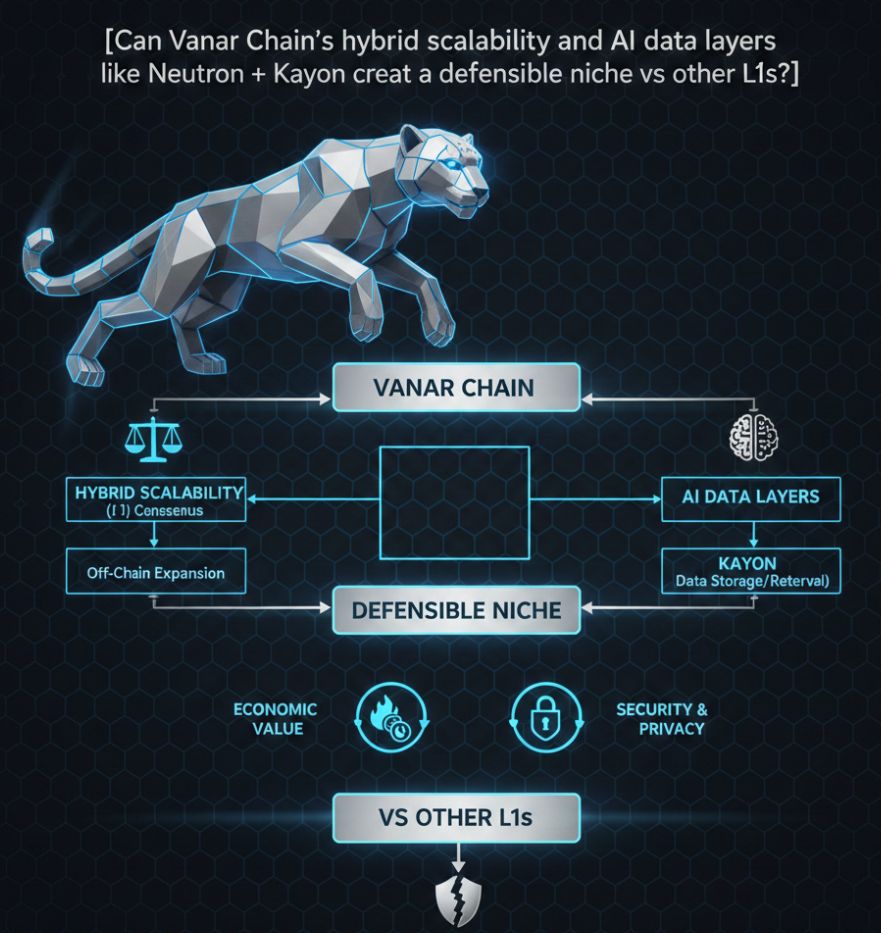

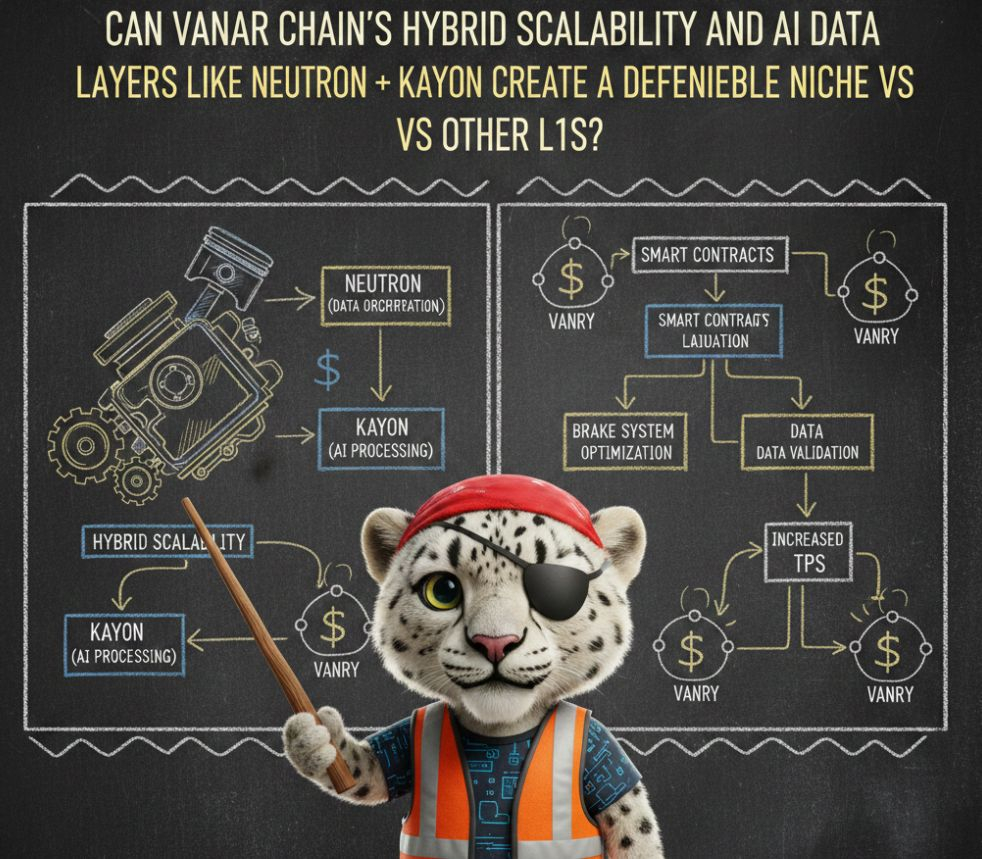

This is where Vanar Chain’s architecture becomes interesting — not because it claims to be faster, but because it attempts to combine hybrid scalability with AI-driven data layers like Neutron and Kayon.

Hybrid scalability, in simple terms, means not forcing one method of scaling onto all activity. Instead of making every transaction compete in the same lane, different workloads can be distributed intelligently. It is less like building a wider highway and more like designing traffic systems that recognize vehicle type and route accordingly. 🚦
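The traffic analogy can be sketched as a small router that picks a lane based on what a workload is, not just when it arrived. This is only an illustration of the general idea, not Vanar's actual mechanism: the `Lane` names, the `Workload` fields, and the size threshold are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Lane(Enum):
    BASE = "base"        # settled individually on the base layer
    BATCHED = "batched"  # grouped with similar activity and settled together
    OFFLOAD = "offload"  # bulky data handled outside the hot path


@dataclass(frozen=True)
class Workload:
    kind: str           # hypothetical label, e.g. "payment" or "game_action"
    payload_bytes: int  # size of the data attached to the workload


# Illustrative threshold only; a real network would tune or govern this.
LARGE_PAYLOAD_BYTES = 100_000


def route(w: Workload) -> Lane:
    """Choose a lane from the workload's type and size."""
    if w.payload_bytes > LARGE_PAYLOAD_BYTES:
        return Lane.OFFLOAD
    if w.kind == "game_action":
        return Lane.BATCHED
    return Lane.BASE


routes = {
    "payment": route(Workload("payment", 300)),
    "game_action": route(Workload("game_action", 120)),
    "document": route(Workload("document", 2_000_000)),
}
for name, lane in routes.items():
    print(f"{name} -> {lane.value}")
```

The point of the sketch is the shape of the decision: a small payment, a burst of game actions, and a large document do not have to compete for the same lane.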

But scalability alone would repeat the same mistake other networks made — assuming throughput solves fragmentation. The structural pivot lies in the AI-native data layers.

Neutron is positioned as a semantic memory layer. Rather than storing only raw transaction data, it organizes relationships between data points. Kayon functions as an intelligence layer capable of interacting with that structured data. The ambition is not merely to record activity, but to interpret it.

To explain this clearly, imagine two systems.

System A stores every receipt you’ve ever received, but only as isolated PDFs.

System B stores receipts while also categorizing them by merchant type, spending behavior, time pattern, and contextual tags.

System A gives you data. System B gives you structured meaning.

That difference is where defensibility may emerge. 🧠
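The System A versus System B contrast can be sketched in a few lines. This is a toy model under stated assumptions, not a description of how Neutron stores anything: the `Receipt` fields, the class names, and the sample data are invented for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Receipt:
    merchant: str
    amount_cents: int
    merchant_type: str = "unknown"
    tags: set[str] = field(default_factory=set)


class SystemA:
    """Keeps every receipt, but only as an undifferentiated pile."""

    def __init__(self) -> None:
        self.receipts: list[Receipt] = []

    def add(self, receipt: Receipt) -> None:
        self.receipts.append(receipt)


class SystemB(SystemA):
    """Keeps the same receipts and also indexes their relationships."""

    def __init__(self) -> None:
        super().__init__()
        self.by_type: dict[str, list[Receipt]] = defaultdict(list)
        self.by_tag: dict[str, list[Receipt]] = defaultdict(list)

    def add(self, receipt: Receipt) -> None:
        super().add(receipt)
        self.by_type[receipt.merchant_type].append(receipt)
        for tag in receipt.tags:
            self.by_tag[tag].append(receipt)

    def total_for_type(self, merchant_type: str) -> int:
        """Answer a question about meaning without rescanning every receipt."""
        return sum(r.amount_cents for r in self.by_type.get(merchant_type, []))


store = SystemB()
store.add(Receipt("City Office", 5000, "government", {"fees"}))
store.add(Receipt("Corner Cafe", 450, "food", {"daily"}))
store.add(Receipt("Passport Desk", 3000, "government", {"fees", "travel"}))

government_total = store.total_for_type("government")
fee_receipts = len(store.by_tag["fees"])
print(government_total, fee_receipts)
```

Both systems hold the same three receipts. Only System B can answer "how much went to government fees?" directly, because the relationships were captured when the data was written, not reconstructed afterward.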

Most L1 networks treat data as a byproduct of transactions. Vanar attempts to treat data as a first-class structural component. If successful, that could create a niche where applications rely not just on execution but on contextual intelligence embedded within the chain itself.

This matters especially for real-world asset tokenization, identity frameworks, gaming economies, and compliance-heavy sectors. These domains require more than transaction finality. They require historical memory and interpretive continuity.

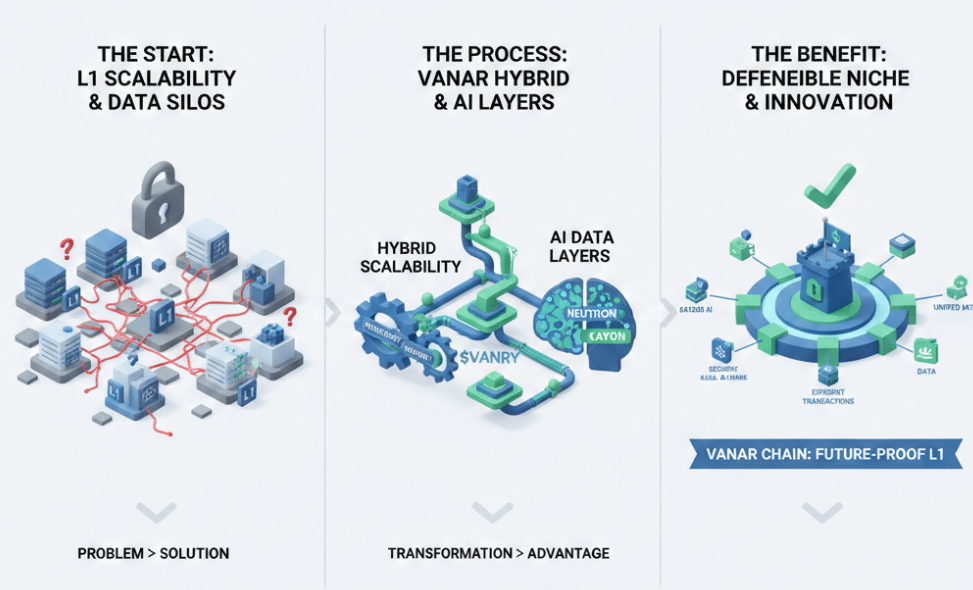

For clarity, a useful visual framework would compare three architectural approaches:

One column showing execution-first chains focused on throughput.

One column showing modular chains separating execution and data availability.

One column showing a hybrid-scalable chain with embedded semantic layers.

The table would highlight differences in how each handles context persistence, not just TPS. That visual matters because it shifts the evaluation metric from speed to structural coherence.

A second helpful visual would be a layered diagram: base execution layer, hybrid scaling mechanism, Neutron as structured data memory, and Kayon as intelligence interface. This would demonstrate how interpretation sits above execution rather than outside it.

However, there are real tensions.

Embedding AI-oriented data layers raises governance questions. Who defines semantic structure? How are biases mitigated? Does contextual interpretation introduce new attack surfaces? Data richness increases utility but also complexity. More structure means more potential fragility. 🧩

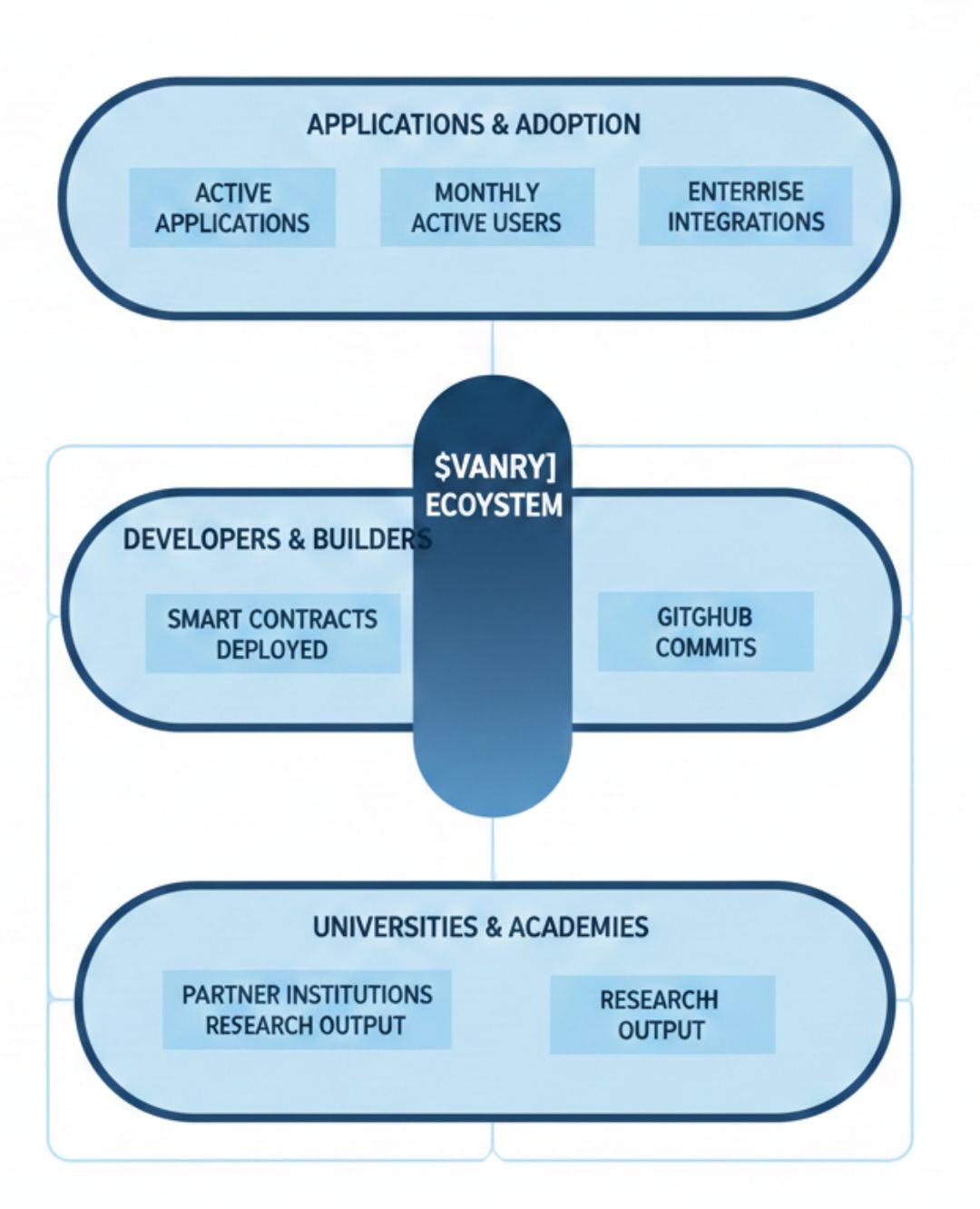

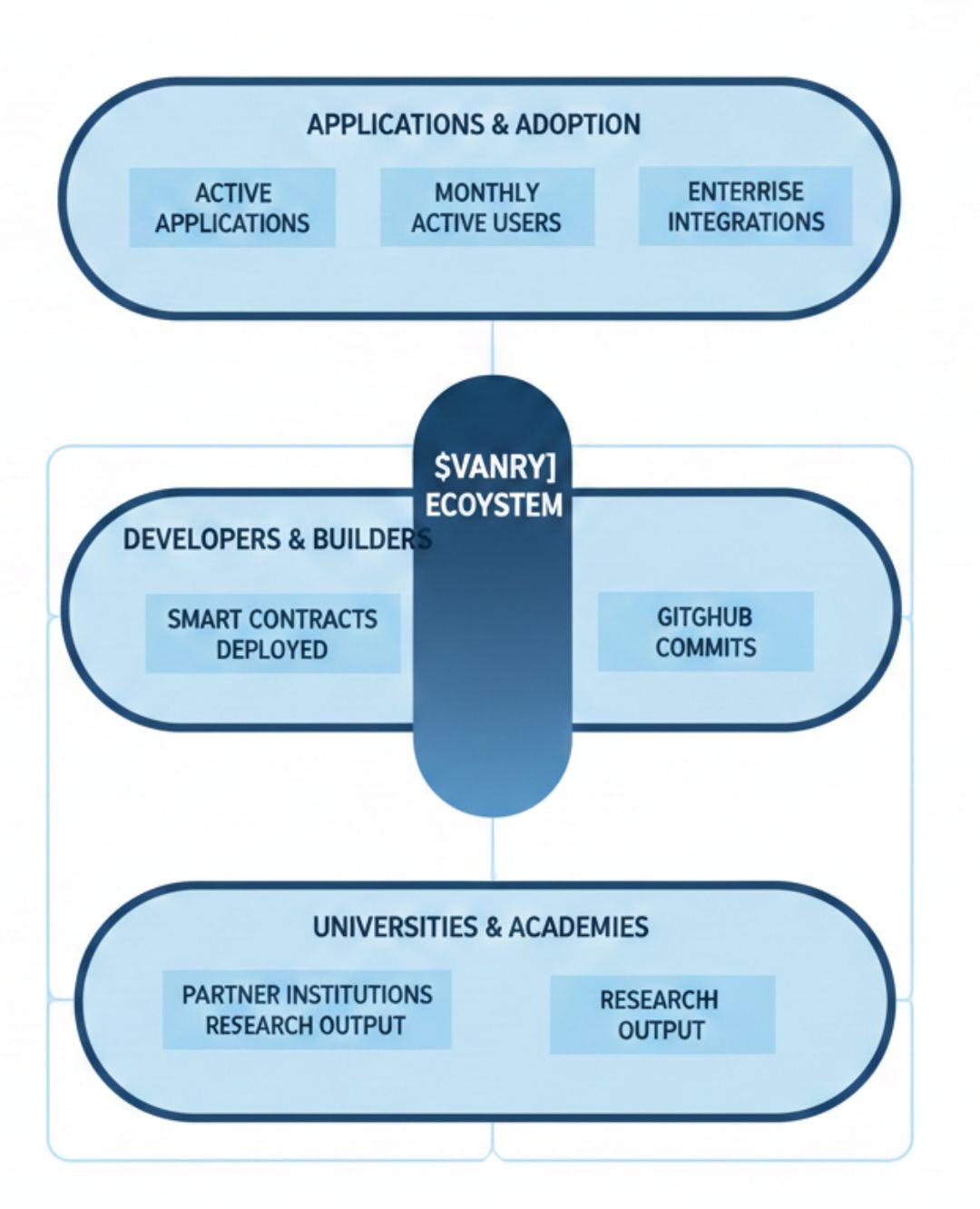

There is also adoption risk. Developers are accustomed to execution-centric environments. Asking them to build with semantic layers requires tooling maturity and documentation clarity. Without strong developer ergonomics, the architecture risks remaining underutilized.

Token mechanics also intersect with defensibility. If VANRY token utility aligns with securing not only transactions but also data validation and memory integrity, that expands its functional role. But if token incentives remain detached from semantic layer performance, structural cohesion weakens.

The deeper question is whether markets value contextual coherence enough to shift toward such architectures. Most capital still chases speed and liquidity metrics. But institutional adoption, especially in regulated sectors, may demand embedded interpretive layers rather than external analytics tools. 🏛️

Yet integration is fragile. AI systems evolve rapidly. Blockchain systems prioritize stability. Merging them means balancing adaptability with consensus rigidity. Too much flexibility weakens determinism. Too much rigidity limits intelligence.

The niche, if it exists, will not be won by claiming superiority. It will depend on whether real applications begin relying on semantic continuity at the base layer rather than treating it as optional middleware.

When my bank app froze, the issue wasn’t that the transaction failed. It was that the system could not reconcile meaning across silos. If future networks can embed context alongside execution, they may reduce that fragmentation.

But here is the unresolved tension: if intelligence becomes embedded at protocol level, who ultimately governs interpretation — code, validators, or the institutions that depend on it? 🤔

@Vanarchain #vanar $VANRY #Vanar