Most performance discussions in crypto still orbit familiar numbers. Transactions per second. Block time. Finality. These metrics matter, but they rarely describe what users actually experience. Users don’t feel TPS. They feel responsiveness. They feel whether the interface reacts immediately or hesitates just long enough to create doubt.

That distinction becomes interesting when looking at Fogo.

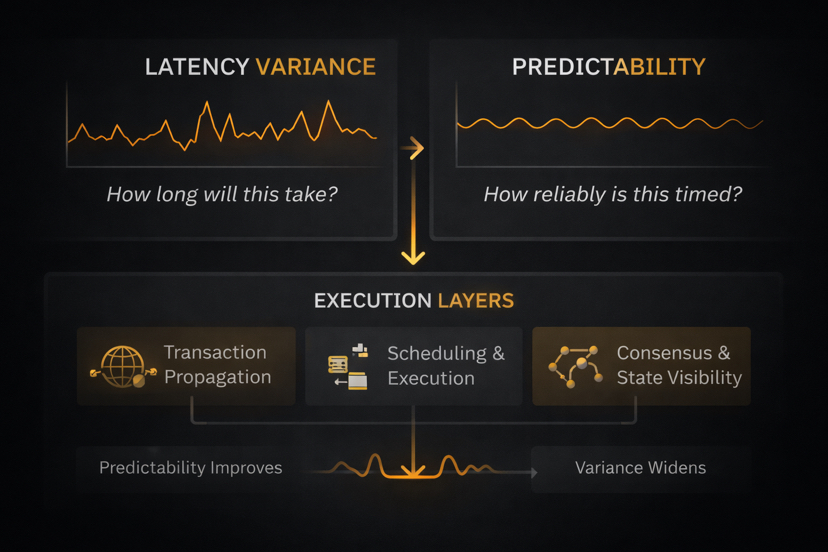

A chain can advertise high throughput and still feel sluggish in practice. This usually happens when latency variance creeps into the system. Variance is the hidden layer of performance. It’s about consistency, not average speed. If one transaction confirms in 400 milliseconds and the next in 4 seconds, the chain is fast on average yet feels unstable.
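To make the point concrete, here is a minimal Python sketch comparing two sets of confirmation times that share the same mean. The sample values are invented for illustration (they reuse the 400 ms and 4 s figures above) and are not measurements from Fogo or any other chain; `percentile` and `summarize` are hypothetical helper names.

```python
import math
import statistics


def percentile(samples, q):
    """Nearest-rank percentile for q in (0, 100]."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(q / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(samples_ms):
    """Return the mean, median, tail, and spread of confirmation times."""
    return {
        "mean": statistics.fmean(samples_ms),
        "p50": percentile(samples_ms, 50),
        "p99": percentile(samples_ms, 99),
        "stdev": statistics.pstdev(samples_ms),
    }


# Illustrative data only: nine quick confirmations and one slow one,
# versus ten confirmations that all take the same time.
erratic = [400] * 9 + [4000]
steady = [760] * 10

for name, samples in (("erratic", erratic), ("steady", steady)):
    print(name, summarize(samples))
```

Both samples average 760 ms, but the erratic one has a p99 of 4,000 ms and a standard deviation of 1,080 ms, while the steady one has no spread at all. An average alone cannot tell the two apart.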

What Fogo appears to be optimizing is not just raw execution, but predictability.

Predictability is rarely discussed because it’s harder to visualize. Throughput produces impressive charts. Predictability produces fewer complaints. But from a systems perspective, variance reduction is often more valuable than peak improvement. Markets, applications, and user behavior adapt more easily to consistent environments than to fast-but-erratic ones.

In practical terms, perceived speed is largely a latency problem.

Latency here is not simply block time. It’s the sum of several layers: transaction propagation, scheduling, execution, consensus, and state visibility. A bottleneck in any one of these layers stretches the end-to-end delay the user sees, regardless of theoretical TPS.
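The additive view can be sketched in a few lines of Python. The stage names follow the list above; every number is a hypothetical placeholder, not a measured value, and the model assumes the stages run strictly one after another, which real pipelines only approximate.

```python
# Hypothetical per-stage latencies in milliseconds (illustrative only).
BASELINE_MS = {
    "propagation": 40,
    "scheduling": 10,
    "execution": 15,
    "consensus": 120,
    "state_visibility": 30,
}


def end_to_end_ms(stages):
    """User-visible latency, assuming stages run back to back."""
    return sum(stages.values())


def bottleneck(stages):
    """Name of the stage that contributes the most delay."""
    return max(stages, key=stages.get)


# Same pipeline, but with a congested network slowing propagation.
congested = {**BASELINE_MS, "propagation": 900}

print(end_to_end_ms(BASELINE_MS), bottleneck(BASELINE_MS))
print(end_to_end_ms(congested), bottleneck(congested))
```

In this toy model execution takes 15 ms in both cases, yet the user-visible total moves from 215 ms to 1,075 ms because a single stage degraded.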

This is where architectural design choices start to matter more than headline metrics.

Parallel execution, inherited from the Solana Virtual Machine (SVM) model, plays a role, but only under specific conditions. Transactions must avoid conflicting writes. Developers must structure state carefully. Validators must exploit hardware efficiently. Parallelism is capacity, not a guarantee.

If conflict patterns dominate, parallel chains behave sequentially.
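A simplified Python sketch shows why. It assumes each transaction declares the accounts it writes, and it groups transactions into batches that could run in parallel because their write sets do not overlap. This is an illustration of the general idea only: it ignores read locks, fees, and priorities, and it is not Fogo's or Solana's actual scheduler. The function name `schedule` and the account names are hypothetical.

```python
def schedule(transactions):
    """Group transactions into parallel batches by write-set conflicts.

    transactions: list of (tx_id, set_of_written_accounts), in arrival order.
    A transaction is placed in the batch right after the last batch it
    conflicts with, so conflicting transactions keep their original order.
    """
    batches = []  # each entry: (list of tx ids, set of accounts written)
    for tx_id, writes in transactions:
        level = 0
        for i, (_, locked) in enumerate(batches):
            if not locked.isdisjoint(writes):
                level = i + 1
        if level == len(batches):
            batches.append(([], set()))
        ids, locked = batches[level]
        ids.append(tx_id)
        locked |= writes
    return [ids for ids, _ in batches]


# Three transactions touching unrelated accounts: one parallel batch.
disjoint = [("t1", {"alice"}), ("t2", {"bob"}), ("t3", {"carol"})]

# Three transactions all writing the same hot account: fully sequential.
hot = [("t1", {"pool"}), ("t2", {"pool"}), ("t3", {"pool"})]

print(schedule(disjoint))
print(schedule(hot))
```

The first workload collapses into a single batch, while the second needs one batch per transaction. The hardware is identical in both cases; only the conflict pattern changed.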

Fogo’s design posture suggests a focus on minimizing sources of contention and delay. Faster block cadence reduces waiting intervals. Optimized validator coordination reduces propagation jitter. Tighter scheduling reduces idle compute cycles. None of these mechanisms creates “speed” on its own. Together, they compress uncertainty.

And uncertainty is what users interpret as slowness.

A stalled transaction isn’t merely delayed computation. It’s a break in expectation. Human perception is sensitive to inconsistency. Even small delays feel amplified when they are unpredictable. In trading environments, this amplification becomes economic. Delays distort fills, widen slippage, and reshape behavior.

This reframes the idea of performance.

Performance isn’t how fast the chain can go under ideal conditions. It’s how stable execution remains when conditions degrade. Stress resilience becomes more relevant than peak benchmarks. Consistency under load becomes more valuable than theoretical ceilings.

From this perspective, Fogo is less a speed experiment and more a variance experiment.

Reducing latency variance requires trade-offs. Hardware expectations increase. Validator sets may become more curated. Geographic topology may tighten. These are not incidental details; they are structural consequences of optimizing predictability.

Every performance gain shifts system incentives.

Lower variance benefits latency-sensitive workloads: trading, automation, real-time interactions. It also changes competitive dynamics. When randomness declines, edge extraction moves elsewhere. Sophisticated participants adapt quickly. Retail users benefit most where unpredictability itself was the main tax.

Speed, then, becomes secondary.

What users interpret as “fast” is often simply “reliable timing.” When actions consistently map to outcomes without visible hesitation, the system feels fast regardless of absolute metrics. Perceived performance is a behavioral phenomenon emerging from architectural stability.

This is why two chains with similar TPS can feel completely different.

One may deliver impressive averages but inconsistent confirmation patterns. The other may produce lower peaks yet a tighter latency distribution. Users typically prefer the second environment, even if they cannot articulate why.
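One rough way to quantify that preference is to count how often a confirmation crosses a threshold the user would notice. The Python sketch below does this for two invented samples; the 1,000 ms threshold, the data, and the name `hesitation_rate` are all assumptions chosen for illustration, not figures from any real chain.

```python
def hesitation_rate(latencies_ms, threshold_ms=1000):
    """Fraction of confirmations slower than threshold_ms."""
    if not latencies_ms:
        raise ValueError("need at least one latency sample")
    slow = sum(1 for ms in latencies_ms if ms > threshold_ms)
    return slow / len(latencies_ms)


# Illustrative data only.
# chain_one: better average (770 ms) but one in ten confirmations stalls.
# chain_two: slightly worse average (800 ms) with no stalls at all.
chain_one = [300] * 90 + [5000] * 10
chain_two = [800] * 100

print(hesitation_rate(chain_one))
print(hesitation_rate(chain_two))
```

Under these assumptions the chain with the better average hesitates on 10% of transactions, and the chain with the worse average never does.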

Predictability compounds.

Developers design more confidently. Users transact more freely. Market behavior stabilizes. Systems that reduce uncertainty tend to attract workflows that depend on precision rather than speculation. Over time, reliability becomes a form of performance moat.

Seen through that lens, Fogo’s emphasis on execution discipline, scheduling efficiency, and latency compression reads less like marketing and more like infrastructure strategy.

Because in distributed systems, the question is rarely “how fast can it go?”

The more durable question is:

“How often does it hesitate?”