The first time I heard someone say “AI needs memory on-chain,” I almost dismissed it as another narrative wave. We have heard about faster chains, cheaper fees, smarter contracts. Memory sounded abstract. But the more I looked at VanarChain, the more I realized this is not about storage in the usual sense. It is about giving AI a place to remember in a way that can be verified.

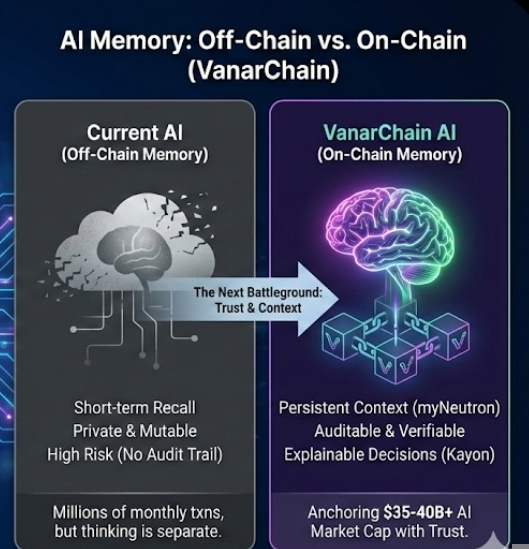

Most AI today has short-term recall. A chatbot remembers your last few messages, maybe a session, then it forgets. Underneath that convenience is a problem. If AI is going to manage assets, automate payments, or execute agreements, it cannot rely on memory that disappears or sits in a private database. It needs a steady foundation. That is where VanarChain is positioning itself, not as just another Layer 1, but as infrastructure where intelligence keeps context.

On the surface, VanarChain looks familiar. It runs as an EVM-compatible chain. Transactions settle like any other network. Fees are paid in VANRY. But underneath, there is a focus on persistent memory layers like myNeutron, which is designed to store semantic context rather than just raw bytes. In plain terms, that means instead of saving only data, the chain can anchor meaning and relationships. If an AI agent interacts with a wallet, signs a contract, or executes a flow, that context can be written in a way that is traceable.
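To make "anchoring context" a little more concrete, here is a minimal Python sketch. It is not myNeutron's actual format or API, which I have not seen documented in this level of detail. The record fields and the `anchor_digest` helper are hypothetical. It only shows one common pattern: serialize a context record canonically, hash it, and treat the digest as the value a chain would store.

```python
import hashlib
import json


def anchor_digest(record: dict) -> str:
    """Return the SHA-256 hex digest of a canonically serialized record.

    Sorting keys and fixing separators means the same logical record
    always produces the same bytes, and therefore the same digest.
    """
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical context record: who acted, what they did, and what it relates to.
context = {
    "agent": "agent-001",
    "action": "sign_contract",
    "wallet": "0xabc",  # placeholder, not a real address
    "relates_to": ["invoice-17"],
}

digest = anchor_digest(context)

# Same content in a different key order yields the same digest.
reordered = dict(reversed(list(context.items())))
same = anchor_digest(reordered) == digest

# Any change to the content yields a different digest.
tampered = {**context, "action": "transfer"}
changed = anchor_digest(tampered) != digest
```

The point of the digest is traceability: anyone holding the original record can recompute it and compare against what was anchored.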

That detail matters more than it sounds. As of January 2026, the AI sector’s token market cap is hovering around 35 to 40 billion dollars, depending on the day, and volatility remains high. Meanwhile, on-chain AI agent transactions across multiple networks have crossed into the millions monthly. The surface growth looks impressive, but most of those agents still depend on off-chain memory. They act on-chain, but they think somewhere else. That separation creates risk.

VanarChain is betting that this gap becomes crypto’s next battleground. Not speed. Not throughput alone. Memory.

Understanding that helps explain why their recent push into cross-chain availability, starting with Base, is significant. Base currently processes hundreds of thousands of daily transactions, with active addresses frequently exceeding 300,000 per day during peak cycles. If Vanar’s memory layer can integrate into that environment, it is no longer a niche experiment. It becomes embedded where liquidity and users already exist.

When I first looked at Vanar’s Flows system, what struck me was how ordinary it felt. You define conditions, connect triggers, automate outcomes. It sounds like Zapier for blockchain. But underneath, it ties into Kayon, their reasoning engine, which aims to make AI decisions explainable. That is not a small detail. In finance, explainability is not a luxury. If an AI liquidates a position or reallocates funds, users will ask why.

On the surface, you see an automated action. Underneath, there is logic stored, memory referenced, reasoning recorded. That layering is subtle but powerful. It enables something new: AI that does not just act, but can show its thinking trail. Early signs suggest that as regulators in the US and Europe look closer at automated financial systems in 2026, this type of transparency may become less optional and more expected.
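A rough way to picture that layering is a rule that returns not just an action but the reason and inputs behind it. The sketch below is my own illustration in Python, not the Flows or Kayon interface. `Rule`, `Decision`, and `evaluate` are hypothetical names, and the collateral threshold is an invented example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    name: str
    metric: str       # key to read from the observed state
    threshold: float  # trigger when the metric falls below this value
    action: str       # outcome to emit when triggered


@dataclass(frozen=True)
class Decision:
    action: str
    reason: str   # human-readable explanation of why the rule fired
    inputs: dict  # snapshot of the state the decision was based on


def evaluate(rule: Rule, state: dict) -> Optional[Decision]:
    """Return a Decision with its reasoning if the rule fires, else None."""
    value = state[rule.metric]
    if value < rule.threshold:
        reason = (
            f"{rule.metric}={value} is below threshold "
            f"{rule.threshold} (rule: {rule.name})"
        )
        return Decision(action=rule.action, reason=reason, inputs=dict(state))
    return None


rule = Rule("low-collateral", "collateral_ratio", 1.5, "liquidate")
fired = evaluate(rule, {"collateral_ratio": 1.2})
skipped = evaluate(rule, {"collateral_ratio": 2.0})
```

If a record like `fired` were written alongside the transaction, the question "why did the agent liquidate?" would have an answer that can be checked later.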

Of course, there are counterarguments. Some developers argue that memory should stay off-chain because it is cheaper and faster. Storing data on-chain has always been expensive. Even with optimized designs, every byte has a cost. That remains true. If VanarChain scales memory-heavy applications, fee pressure could increase. And if usage does not materialize, the memory narrative could fade like others before it.

But the trade-off is about trust. Off-chain memory is private and mutable. On-chain memory is slower but auditable. If AI is going to handle billions in value, which environment feels steadier? We already see institutions testing tokenized real-world assets that require audit trails stretching years, not days. A chain that anchors both transactions and contextual memory starts to look less like an experiment and more like infrastructure.
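The difference between mutable and auditable memory can be shown with a small hash-chained log. This is a generic tamper-evidence technique sketched in Python under my own assumptions, not a description of how VanarChain stores anything. The function names and the entry layout are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, payload: dict) -> str:
    """Hash an entry's payload together with the previous entry's hash."""
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append(log: list, payload: dict) -> None:
    """Append a payload, linking it to the hash of the previous entry."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"prev": prev, "payload": payload, "hash": entry_hash(prev, payload)})


def verify(log: list) -> bool:
    """Recompute every link and report whether the log is still intact."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev:
            return False
        if entry["hash"] != entry_hash(prev, entry["payload"]):
            return False
        prev = entry["hash"]
    return True


log: list = []
append(log, {"agent": "agent-001", "action": "rebalance", "amount": 100})
append(log, {"agent": "agent-001", "action": "pay", "amount": 25})
ok_before = verify(log)

# Quietly editing an old entry, as a private database would allow,
# breaks the recomputed hash and is detected.
log[0]["payload"]["amount"] = 999
ok_after = verify(log)
```

In a private database the edit on the second-to-last line would go unnoticed. With the chained hashes published somewhere others can read them, it does not.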

Meanwhile, VANRY’s market behavior reflects this tension. Over the past year, it has seen cycles of accumulation during AI narrative spikes and pullbacks during broader market corrections. In early 2026, daily trading volumes have frequently ranged in the tens of millions of dollars. That liquidity tells you there is attention, but attention alone is not durability. The real test is whether developers build sustained applications on top of this memory layer.

If developers do build on it, that momentum creates another effect. If one network proves that AI memory anchored on-chain reduces fraud, improves automation reliability, or simplifies compliance, other chains will respond. We have seen this pattern before. When DeFi protocols proved that automated market makers could replace order books for certain use cases, the design spread. When rollups showed that scaling through Layer 2 could work, the ecosystem followed.

Memory may follow the same path. Quiet at first. Underestimated. Then standard.

There is also a deeper shift happening underneath crypto markets right now. The conversation is moving from infrastructure as speed to infrastructure as intelligence. In 2021, the race was about transactions per second. In 2023 and 2024, it was about modular design and rollups. In 2026, as AI agents start to execute trades, rebalance portfolios, and manage DAO treasuries, the question becomes different. Where does the intelligence live, and who can verify it?

VanarChain is not the only project exploring this space, but it is one of the few making memory a central theme rather than a side feature. That focus reads as a deliberate design choice rather than a reaction to the latest narrative. If the approach holds up, it could redefine how we think about smart contracts. They may evolve from static rule sets to dynamic agents with long-term context.

Still, uncertainty remains. Developer adoption is uneven. Competing AI chains are emerging. Broader market cycles can drown out nuanced infrastructure stories. And users may not immediately care where AI memory sits as long as apps feel smooth.

But history suggests that what feels invisible at first often becomes foundational. DNS was invisible. Cloud storage was invisible. Even blockspace itself was invisible until fees spiked.

Memory is like that. Quiet. Underneath. Holding everything together.

If AI is going to manage capital at scale, the network that controls not just execution but remembered context may quietly control the future of crypto.