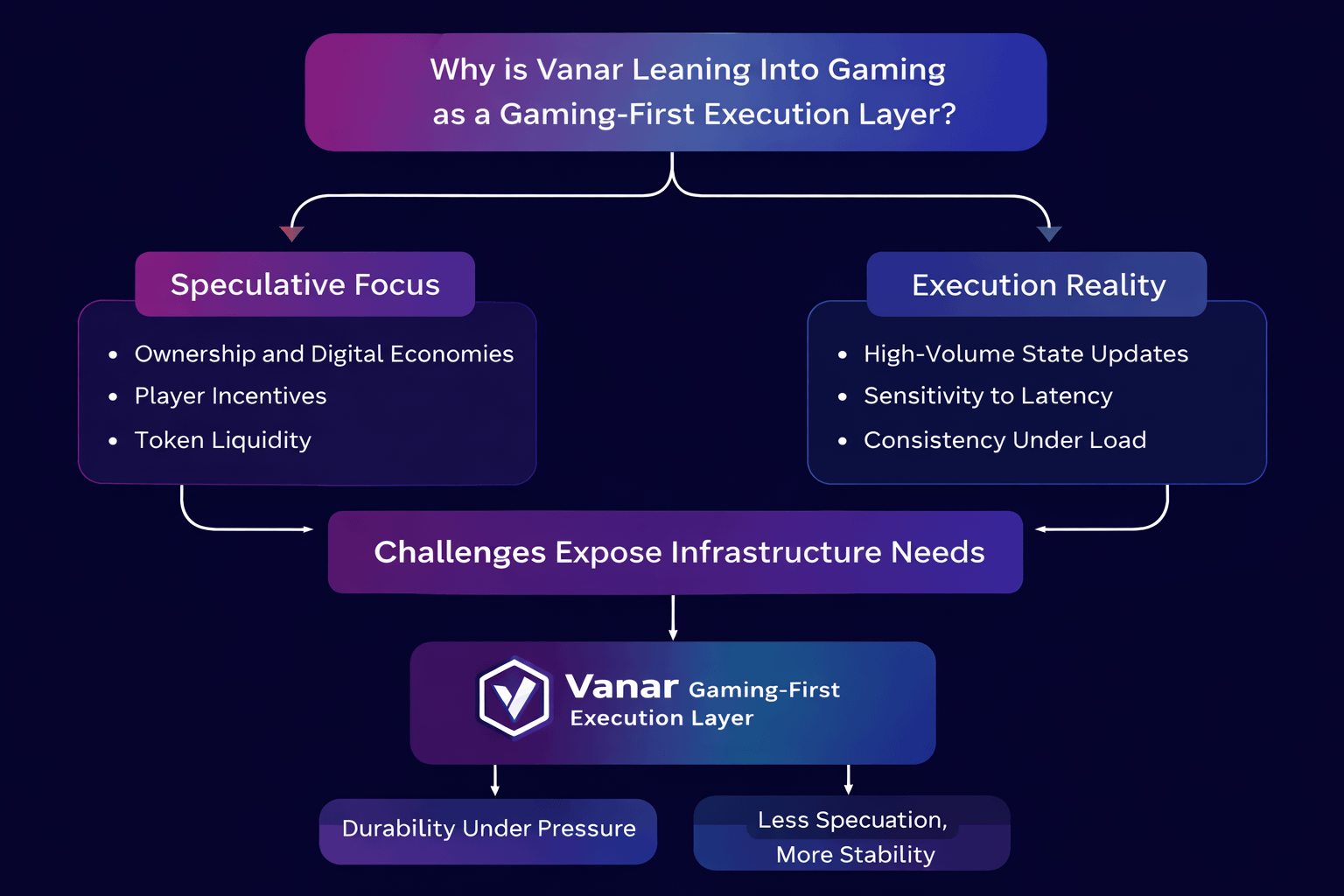

I’ve been following blockchain gaming conversations for a while now, and I keep noticing the same pattern. Most discussions stay on the surface. People talk about player ownership, tokenized assets, digital economies, interoperable worlds. The narrative usually sounds clean and optimistic: if players truly own their assets and can move them freely, a new kind of gaming economy naturally emerges.

That part is easy to imagine.

What’s harder, and what gets less attention, is the environment underneath those ideas.

From where I stand, gaming isn’t just another category of decentralized application. It behaves differently. It stresses infrastructure differently. It doesn’t look like a simple asset transfer system or a DeFi protocol. It looks more like a live service running continuously, reacting to thousands of small actions every second.

When someone plays a game, they’re not making one transaction and leaving. They’re triggering state changes constantly. Micro-updates. Interactions that depend on other interactions. Events that have to remain synchronized, especially in multiplayer environments. And all of that has to feel seamless.

That’s where theory meets reality.

In demos, many systems look fine. Testnets run smoothly. Carefully staged environments handle scripted interactions without issues. But production is different. Production is messy. Players behave unpredictably. Traffic spikes at inconvenient times. Updates roll out mid-season. Indexers fall slightly behind. A tiny design shortcut suddenly interacts badly with a surge in demand.

And systems don’t usually break at peak performance. They degrade quietly at coordination points, the places where one component has to stay in sync with another.

Gaming amplifies this problem. Multiplayer environments introduce synchronization requirements. Digital assets introduce persistence. Competitive mechanics introduce fairness constraints. A slight delay in state propagation might be harmless in a simple transfer app. In a game, it can change outcomes. It can frustrate players. It can even create exploit windows.

Small tolerances become big problems over time.
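To make the exploit-window point concrete, here is a minimal Python sketch. It assumes a hypothetical game server that checks item ownership against an indexer lagging behind canonical chain state. `Chain`, `LaggingIndexer`, `game_server_allows_use`, and `exploit_window_demo` are illustrative names only, not Vanar APIs or any real library. The sketch shows how a player who has already traded an item away can still use it until the indexer catches up.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Chain:
    """Hypothetical canonical ledger: item id -> current owner."""
    owners: dict[str, str] = field(default_factory=dict)
    log: list[tuple[str, str]] = field(default_factory=list)  # ordered state changes

    def transfer(self, item: str, new_owner: str) -> None:
        self.owners[item] = new_owner
        self.log.append((item, new_owner))


@dataclass
class LaggingIndexer:
    """Hypothetical read replica that applies chain updates only when catch_up() runs."""
    chain: Chain
    applied: int = 0  # number of log entries already applied to `view`
    view: dict[str, str] = field(default_factory=dict)

    def catch_up(self, max_entries: int) -> None:
        # Apply at most `max_entries` pending updates, in log order.
        pending = self.chain.log[self.applied:self.applied + max_entries]
        for item, owner in pending:
            self.view[item] = owner
        self.applied += len(pending)

    def owner_of(self, item: str) -> str | None:
        return self.view.get(item)


def game_server_allows_use(indexer: LaggingIndexer, player: str, item: str) -> bool:
    # The server trusts the indexer's view, which may be stale.
    return indexer.owner_of(item) == player


def exploit_window_demo() -> tuple[bool, bool]:
    chain = Chain()
    indexer = LaggingIndexer(chain)

    chain.transfer("sword", "alice")
    indexer.catch_up(max_entries=10)  # indexer in sync: alice owns the sword

    chain.transfer("sword", "bob")  # alice trades the sword to bob on-chain
    # The indexer has not processed the transfer yet, so alice still passes the check.
    during_lag = game_server_allows_use(indexer, "alice", "sword")

    indexer.catch_up(max_entries=10)  # indexer processes the transfer
    after_sync = game_server_allows_use(indexer, "alice", "sword")
    return during_lag, after_sync


if __name__ == "__main__":
    print(exploit_window_demo())  # (True, False)
```

Under these assumptions, the window exists only between the on-chain transfer and the indexer catching up. One way to close it is to have ownership-sensitive actions read canonical state, or refuse to proceed while the indexer is behind, at the cost of extra latency.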

One thing I’ve learned from studying complex systems is that early assumptions harden faster than we expect. If a network begins as a general-purpose chain, that flexibility seeps into everything: tooling, governance decisions, validator expectations, fee models. Later, trying to pivot toward something state-heavy and latency-sensitive isn’t just a parameter change. It becomes structural.

Execution models may need to shift. Fee mechanics may need adjustment. Indexing layers may require redesign. Validator incentives may need to evolve. Each of those changes carries friction. Each introduces new coordination risk.

That’s why Vanar’s decision to lean into gaming in 2026 feels less like chasing a trend and more like narrowing its focus early.

When a network positions itself as gaming-first, it’s making quiet commitments. It’s saying that transaction patterns will be evaluated through the lens of interactive workloads. That latency matters. That asset lifecycle management isn’t optional. That developer tooling must account for repetitive, state-heavy logic rather than occasional transfers.

Specialization isn’t about hype. It’s about constraint.

And in engineering, constraint isn’t weakness. It’s clarity.

A system optimized for gaming might prioritize predictable execution over broad composability. It might shape its fee mechanics to reduce friction for frequent in-game actions instead of occasional high-value transfers. It might invest more heavily in middleware and developer tools tailored to digital asset interactions.

Every one of those choices narrows the design space. But over years, those narrowed decisions compound into identity.

There’s always a trade-off. Systems adapted from general-purpose designs carry legacy assumptions. They often rely on compatibility layers. Those layers work until complexity starts stacking. And in distributed systems, complexity isn’t abstract. It translates directly into maintenance overhead and wider failure surfaces.

On the other hand, a specialized system may sacrifice some flexibility. The cost of specialization is optionality. But the reward can be internal coherence.

Gaming forces discipline. It exposes infrastructure weaknesses quickly. If confirmation feels inconsistent, players notice immediately. If asset updates are unreliable, developers feel the friction. If tooling isn’t mature, support burdens escalate fast.

It’s not glamorous work. Most of it is invisible.

For years, the loudest story in blockchain gaming revolved around speculation — token price cycles, early adopter incentives, growth projections. But the quieter, more difficult challenge has always been operational durability. Can the infrastructure survive real usage? Can it handle multiple update cycles without degrading? Can it evolve without accumulating fragility?

Because real games don’t launch once and freeze in time. They patch. They rebalance. They expand. Player behavior shifts. Asset standards evolve. Data grows. And every update adds stress.

Durability isn’t about a successful launch week. It’s about surviving year three.

When a network leans into gaming, it accepts that these pressures will shape everything — validator performance, state growth management, data availability strategies, developer experience. Those choices either reinforce resilience or slowly expose structural weaknesses.

Markets will move. Narratives will rotate. Capital will chase whatever looks exciting in the moment.

But underneath all of that, one question quietly determines whether the strategy holds:

Can the execution layer sustain years of unpredictable, state-heavy interaction without accumulating fragility faster than it accumulates value?

If it can, the positioning becomes durable regardless of narrative cycles.

If it can’t, speculation won’t save it.

From my perspective, that’s what makes Vanar’s gaming focus interesting in 2026. Not the theme. Not the trend.

The commitment to execution under pressure.