When I first started paying attention to AI showing up on-chain, what unsettled me wasn’t the speed or the demos. It was the quiet realization that these systems were no longer just tools. They were beginning to act. And once something can act, the question isn’t how impressive the stage looks; it’s who wrote the rules.

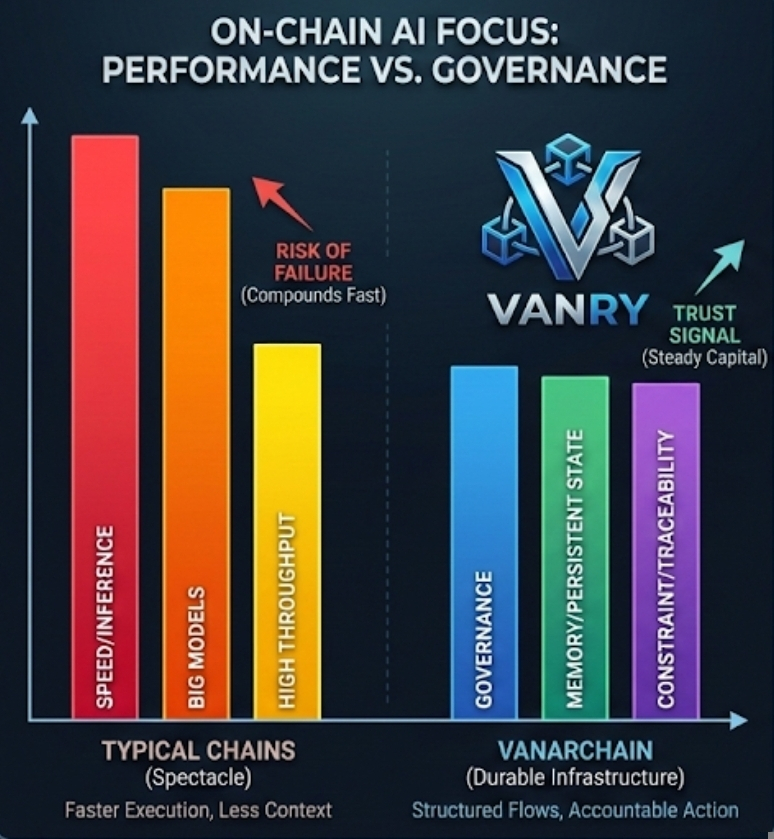

That’s where VanarChain started to feel different to me. Not louder. Not flashier. Just more deliberate. Most networks courting AI energy right now are obsessed with performance theater. Faster inference. Bigger models. Higher throughput. But underneath that noise, Vanar seems focused on something less glamorous and more durable: governance, memory, and constraint.

The timing matters. In early 2026, on-chain AI activity is no longer theoretical. Based on aggregated DEX analytics, autonomous trading bots now account for an estimated 12 to 18 percent of DeFi volume, depending on the week. That number isn’t impressive because it’s big. It’s impressive because it holds week after week. These agents don’t sleep, don’t panic, and don’t forget.

They act based on prior state. And that persistence creates a new kind of risk.

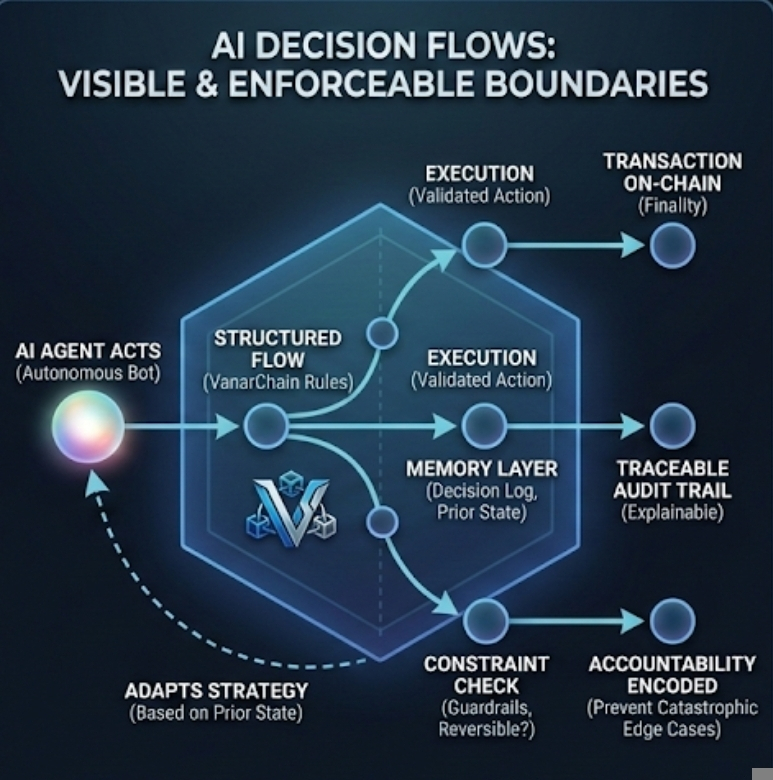

On the surface, most chains treat AI agents like very fast users. They submit transactions. They trigger contracts. They pay gas. Underneath, though, those agents are carrying context across time. They remember past outcomes. They adapt strategies. That memory layer exists mostly off-chain today, tucked into private databases or opaque services. Which means when something goes wrong, the chain can see the action but not the reasoning.

Understanding that helps explain why Vanar leans so heavily into structured flows and persistent state. Instead of asking how to execute faster, it asks how decisions are formed, recorded, and constrained. A flow, in simple terms, is a mapped sequence of logic that defines what an agent can do, when it can do it, and under which conditions it must stop or explain itself. On the surface, it looks like orchestration. Underneath, it’s accountability encoded as infrastructure.
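To make the idea of a flow concrete, here is a minimal Python sketch of a mapped sequence of steps where every step must pass a rule before it acts. The rule can let the step proceed, demand an explanation first, or stop the flow outright. This is not Vanar’s API. `Step`, `Verdict`, `run_flow`, and the liquidity threshold are hypothetical names and numbers chosen only for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, List, Optional, Tuple

State = dict  # hypothetical agent state, e.g. pool liquidity and order size


class Verdict(Enum):
    PROCEED = "proceed"  # step may run
    EXPLAIN = "explain"  # step may run, but only after a justification is recorded
    STOP = "stop"        # flow halts here; later steps never run


@dataclass
class Step:
    name: str
    guard: Callable[[State], Verdict]  # the rule, checked before acting
    action: Callable[[State], State]   # the move itself; returns the new state


@dataclass
class FlowResult:
    state: State
    completed: List[str] = field(default_factory=list)
    explanations: List[Tuple[str, str]] = field(default_factory=list)
    stopped_at: Optional[str] = None


def run_flow(steps: List[Step], state: State,
             explain: Callable[[str, State], str]) -> FlowResult:
    """Run steps in order; each step must pass its guard before it acts."""
    result = FlowResult(state=dict(state))
    for step in steps:
        verdict = step.guard(result.state)
        if verdict is Verdict.STOP:
            result.stopped_at = step.name
            break
        if verdict is Verdict.EXPLAIN:
            # Record why the step is being taken before it runs.
            result.explanations.append((step.name, explain(step.name, result.state)))
        result.state = step.action(result.state)
        result.completed.append(step.name)
    return result


# Usage: refuse to rebalance a thin pool; demand a reason for large orders.
def rebalance_guard(s: State) -> Verdict:
    if s["pool_liquidity"] < 1_000:
        return Verdict.STOP
    if s["size"] > 0.1 * s["pool_liquidity"]:
        return Verdict.EXPLAIN
    return Verdict.PROCEED


steps = [
    Step("quote", lambda s: Verdict.PROCEED, lambda s: {**s, "quoted": True}),
    Step("rebalance", rebalance_guard, lambda s: {**s, "rebalanced": True}),
]
thin = run_flow(steps, {"pool_liquidity": 500, "size": 50},
                lambda name, s: f"{name}: size {s['size']}")
deep = run_flow(steps, {"pool_liquidity": 5_000, "size": 800},
                lambda name, s: f"{name}: size {s['size']}")
```

In the thin pool, the flow quotes and then stops at `rebalance`. In the deep pool, it rebalances only after logging a justification. The point is that the stop and explain conditions live in the flow itself, where they can be inspected, rather than inside the agent’s private logic.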

This distinction matters more than it sounds. In January 2026 alone, three high-profile DeFi incidents involved AI-driven strategies that behaved exactly as coded but produced outcomes no one wanted. In one case, an autonomous liquidity manager amplified volatility during a low-liquidity window, extracting value but destabilizing the pool. The chain executed flawlessly. The intent was nowhere to be found.

Vanar’s approach suggests a different assumption: that if AI is going to act on-chain, its decision space needs boundaries that are visible and enforceable. Not just permissions, but memory. Not just execution, but traceability. When an agent makes a move, the question shouldn’t only be whether it was valid. It should be whether it was expected, justified, and reversible.

Of course, this creates friction. Encoding rules slows things down. Early benchmarks from Vanar’s public testing show transaction finality that’s steady rather than explosive, with throughput sitting comfortably below the headline numbers chains love to advertise. But context matters. Those numbers reflect flows that carry metadata, checkpoints, and decision logs. You’re not just moving tokens. You’re moving responsibility.
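One way to picture “decision logs” with “checkpoints” is an append-only log where each entry commits to the hash of the one before it. Any later rewrite of an agent’s stated reason then breaks the chain. The Python sketch below assumes that design. `DecisionLog`, its methods, and the JSON-plus-SHA-256 encoding are hypothetical and do not describe Vanar’s actual format.

```python
import hashlib
import json
from dataclasses import dataclass, replace

GENESIS = "0" * 64  # hypothetical starting hash for an empty log


@dataclass(frozen=True)
class LogEntry:
    agent: str
    action: str
    reason: str
    prev_hash: str
    entry_hash: str


def _digest(agent: str, action: str, reason: str, prev_hash: str) -> str:
    # Canonical JSON so the same fields always produce the same hash.
    payload = json.dumps(
        {"agent": agent, "action": action, "reason": reason, "prev": prev_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class DecisionLog:
    def __init__(self) -> None:
        self.entries = []  # list of LogEntry, oldest first

    def append(self, agent: str, action: str, reason: str) -> LogEntry:
        prev = self.checkpoint()
        entry = LogEntry(agent, action, reason, prev, _digest(agent, action, reason, prev))
        self.entries.append(entry)
        return entry

    def checkpoint(self) -> str:
        """Hash of the latest entry; publishing it pins the whole history."""
        return self.entries[-1].entry_hash if self.entries else GENESIS

    def verify(self) -> bool:
        prev = GENESIS
        for e in self.entries:
            if e.prev_hash != prev or e.entry_hash != _digest(e.agent, e.action, e.reason, e.prev_hash):
                return False
            prev = e.entry_hash
        return True


log = DecisionLog()
log.append("lp-manager", "widen_range", "volatility above threshold")
log.append("lp-manager", "withdraw_10pct", "pool depth below target")
ok_before = log.verify()
checkpoint = log.checkpoint()

# Quietly rewriting an earlier justification is detectable.
log.entries[0] = replace(log.entries[0], reason="routine rebalance")
ok_after = log.verify()
```

Here `ok_before` is `True` and `ok_after` is `False`. That extra hashing and bookkeeping on every decision is exactly the kind of overhead that keeps throughput below headline numbers.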

There’s a counterargument worth taking seriously. Some builders argue that constraints kill innovation. That AI systems learn best when unconstrained, adapting freely to environments. That writing rulebooks too early risks freezing the future. I get that instinct. It mirrors early debates around financial regulation. But what’s different here is speed. AI agents iterate orders of magnitude faster than humans. Without guardrails, mistakes don’t stay small.

Meanwhile, the market is quietly signaling what it values. Despite the broader altcoin rotation in February 2026, infrastructure tokens tied to compliance, auditability, and control have shown steadier drawdowns than pure speculation plays. It’s not a bull signal. It’s a trust signal. Capital tends to linger where uncertainty is at least legible.

Explicit rules have another effect. Once rules are explicit, coordination becomes possible. Multiple agents can operate within shared constraints without tripping over each other. Think less swarm, more traffic system. Not because it’s elegant, but because it reduces catastrophic edge cases. Vanar’s flows start to look less like developer tools and more like civic infrastructure for machines.
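A traffic-system constraint can be as simple as a shared cap that every agent must reserve against before acting. The Python sketch below assumes a hypothetical per-window limit on total order size pushed into one pool. `SharedLimit` and its methods are illustrative names, not part of any real chain’s interface.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class SharedLimit:
    """Hypothetical shared rule: the total size all agents may push
    into one pool within a single window (for example, one block)."""
    cap: float
    used: Dict[str, float] = field(default_factory=dict)  # agent -> size reserved this window

    def request(self, agent: str, size: float) -> bool:
        if size <= 0:
            raise ValueError("size must be positive")
        total = sum(self.used.values())
        if total + size > self.cap:
            return False  # denied: this order would breach the shared cap
        self.used[agent] = self.used.get(agent, 0.0) + size
        return True

    def reset_window(self) -> None:
        self.used.clear()  # a new window starts with the full cap available


limit = SharedLimit(cap=100.0)
a_ok = limit.request("agent-a", 60.0)     # fits under the cap
b_big = limit.request("agent-b", 50.0)    # denied: 60 + 50 > 100
b_small = limit.request("agent-b", 40.0)  # fits exactly: 60 + 40 == 100
```

Neither agent needs to know the other’s strategy. Each agent only needs to respect the same visible limit, which is what keeps a fast swarm from piling into one thin pool at once.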

What struck me while digging deeper is how unambitious this sounds on the surface. No promises of dominance. No claims of replacing everything. Just a steady insistence that agency without accountability doesn’t scale. That’s a hard sell in a market addicted to spectacle. But it’s also how durable systems tend to be built.

There are risks here too. Encoding rules assumes we know which behaviors matter. We don’t. Early rulebooks often miss edge cases or bake in biases. And if governance around those rules ossifies, adaptation slows. Vanar’s challenge will be keeping its foundations firm without making them brittle. Early signs suggest this is an active concern rather than an afterthought, but it remains to be seen how it plays out under stress.

Zooming out, this fits a broader pattern. As AI systems move from assisting humans to acting alongside them, infrastructure is shifting from enabling possibility to enforcing responsibility.

The same way financial rails evolved from speed to settlement guarantees, AI rails are beginning to value memory, audit, and restraint. Not because it’s idealistic, but because the cost of failure compounds faster now.

If this holds, the next wave of on-chain innovation won’t be defined by who can make AI act first. It will be defined by who can make it answer for what it does.

And that’s the part that sticks with me. In a space obsessed with stages, Vanar is spending its energy on the rulebook. Quietly. Underneath everything else. Because once the actors arrive, it’s already too late to start writing the rules.