Today, February 10, 2026, I want to explore a topic that has quietly been reshaping how we think about AI agents and long-running workflows. I’m Dr_MD_07, and today I’ll explain how Vanar Chain’s integration with Neutron, a persistent memory API, changes the way agents operate, making them more durable and knowledge-driven over time. This is about more than storing data; it’s about building memory that survives restarts, shutdowns, and even complete agent replacement, letting intelligence persist beyond individual instances.

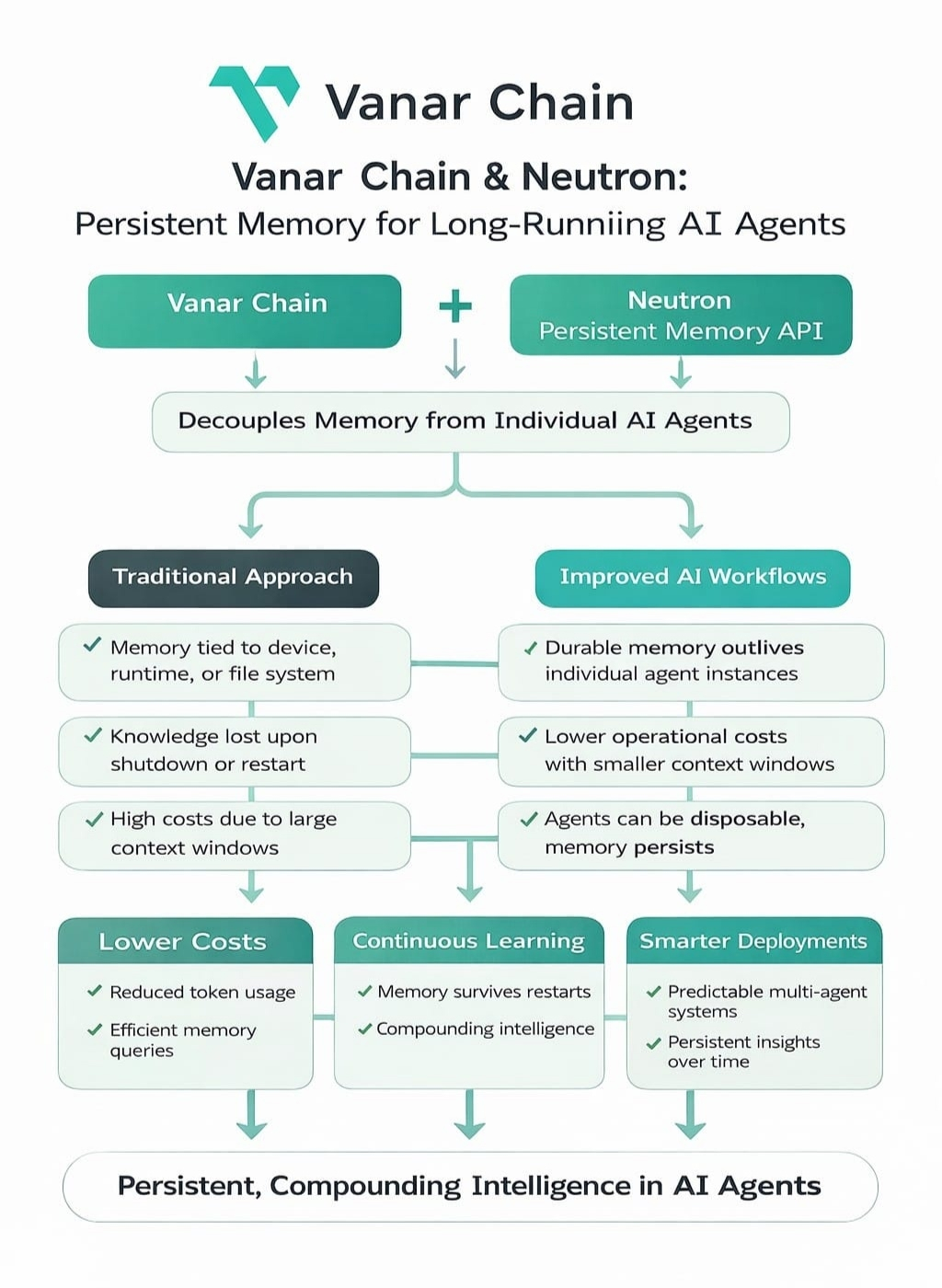

Traditionally, AI agents tie memory to a device, runtime, or file system. Once the process stops, much of that knowledge disappears. With Neutron, this model shifts. Memory is decoupled from the agent itself, meaning an instance can shut down, restart somewhere else, or be replaced entirely, yet continue operating as if nothing changed. The agent becomes disposable, while memory becomes the enduring asset. This simple shift has deep implications for both developers and businesses relying on AI-driven workflows. Knowledge is no longer ephemeral; it compounds over time.
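To make the decoupling concrete, here is a minimal sketch of the idea. It does not use Neutron's actual API: `MemoryStore` and `Agent` are hypothetical names, and the store is a plain in-process dictionary standing in for an external, durable memory service.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class MemoryStore:
    """Hypothetical stand-in for an external memory service (not Neutron's real API)."""
    records: Dict[str, str] = field(default_factory=dict)

    def put(self, key: str, value: str) -> None:
        self.records[key] = value

    def get(self, key: str) -> Optional[str]:
        return self.records.get(key)


class Agent:
    """A disposable worker: it holds a reference to memory, not the memory itself."""

    def __init__(self, name: str, memory: MemoryStore) -> None:
        self.name = name
        self.memory = memory

    def learn(self, key: str, value: str) -> None:
        self.memory.put(key, value)

    def recall(self, key: str) -> Optional[str]:
        return self.memory.get(key)


# The store lives outside any single agent instance.
store = MemoryStore()

first = Agent("agent-v1", store)
first.learn("customer_42.preferred_currency", "EUR")
del first  # simulate a shutdown or a full replacement of the agent

# A brand-new instance picks up exactly where the old one left off.
replacement = Agent("agent-v2", store)
recalled = replacement.recall("customer_42.preferred_currency")
print(recalled)
```

The point of the design is that nothing the agent knows lives in the agent object itself, so throwing the object away costs nothing.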

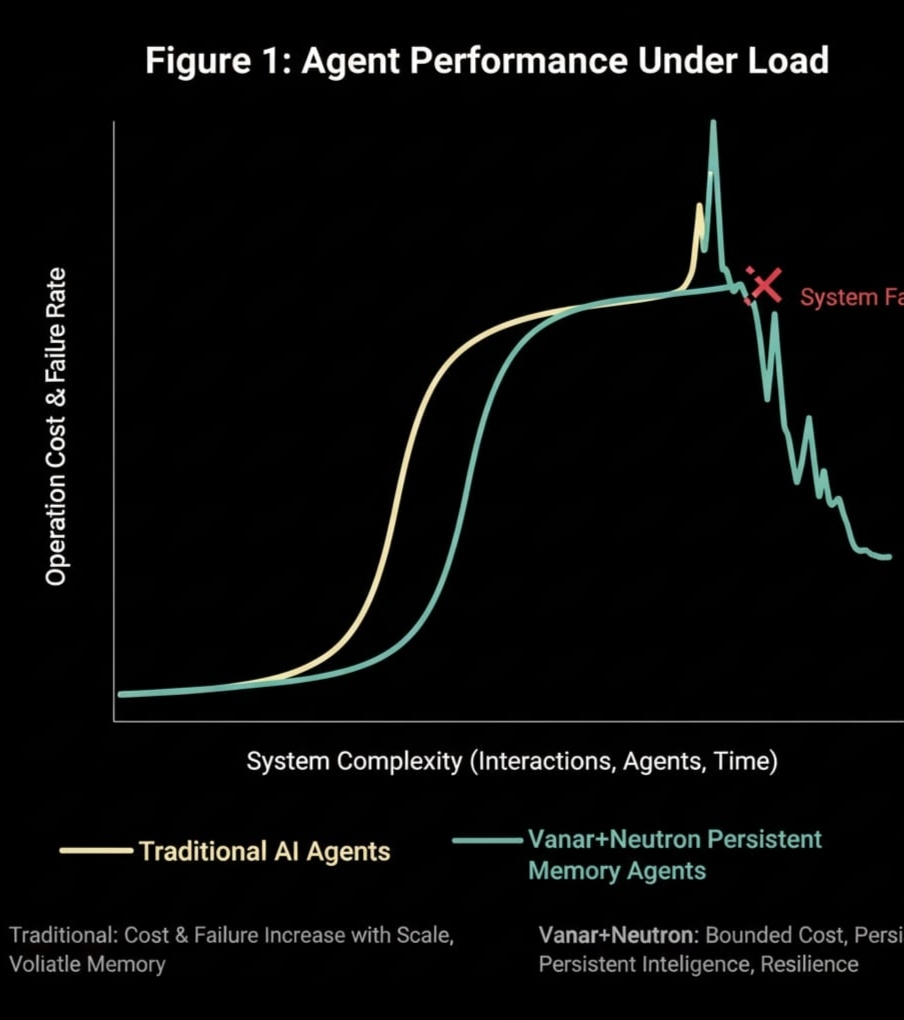

Neutron works by compressing what actually matters into structured knowledge objects. Instead of dragging a full history through every prompt, which quickly becomes costly in tokens and unwieldy for context, agents query memory the way they query a tool. This makes interactions more efficient. Large context windows, which in traditional AI setups could balloon and raise operational costs, remain manageable. The result is not just cost reduction; it’s a system that behaves more like actual infrastructure than a series of experimental scripts. Background agents, always-on workflows, and multi-agent systems begin functioning predictably, without the constant overhead of resending historical data.
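A rough sketch of what "querying memory like a tool" can look like follows. Everything in it is an assumption for illustration: the `KnowledgeObject` shape, the `query_memory` and `build_prompt` helpers, and the simple word-overlap ranking are mine, not Neutron's schema or retrieval method.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class KnowledgeObject:
    """Hypothetical shape for a compressed memory entry."""
    topic: str
    summary: str


def query_memory(objects: List[KnowledgeObject], question: str, limit: int = 2) -> List[KnowledgeObject]:
    """Rank objects by how many question words appear in their topic or summary."""
    words = set(question.lower().split())

    def score(obj: KnowledgeObject) -> int:
        text = f"{obj.topic} {obj.summary}".lower().split()
        return len(words & set(text))

    ranked = sorted(objects, key=score, reverse=True)
    # Keep only the top matches, and drop anything with no overlap at all.
    return [obj for obj in ranked[:limit] if score(obj) > 0]


def build_prompt(objects: List[KnowledgeObject], question: str) -> str:
    """Send only the retrieved objects to the model, not the whole history."""
    hits = query_memory(objects, question)
    context = "\n".join(f"- {o.topic}: {o.summary}" for o in hits)
    return f"Relevant memory:\n{context}\n\nQuestion: {question}"


memory = [
    KnowledgeObject("invoice policy", "invoices over 10000 need two approvals"),
    KnowledgeObject("support hours", "support desk runs 09:00 to 17:00 UTC"),
    KnowledgeObject("refund policy", "refunds are issued within 14 days"),
]

prompt = build_prompt(memory, "how many approvals do invoices need")
print(prompt)
```

Only the invoice entry reaches the prompt here; the other two stay in memory until a question actually needs them. A real system would likely use semantic search rather than word overlap, but the shape of the call is the same.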

From a professional standpoint, this changes the economics of long-running agents. In traditional models, where the full history is resent on every call, context size grows roughly linearly with the length of the interaction, so the cumulative token cost grows roughly quadratically. With Neutron, agents maintain a persistent knowledge base that can be queried selectively, keeping both context windows and costs in check. For companies exploring AI automation, this matters. Persistent memory allows workflows to evolve naturally over days, weeks, or months without creating bottlenecks or forcing constant retraining. Teams can deploy agents that improve over time rather than repeating the same learning loops after each restart.
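The cost difference is easy to see with back-of-the-envelope arithmetic. The sketch below compares an agent that resends its whole history on every call against one that sends only the new turn plus a fixed-size slice of retrieved memory. The turn count and token sizes are illustrative assumptions, not measurements from Neutron or any real deployment.

```python
def cumulative_tokens_full_history(turns: int, tokens_per_turn: int) -> int:
    """Each call resends every earlier turn plus the new one."""
    return sum(t * tokens_per_turn for t in range(1, turns + 1))


def cumulative_tokens_with_retrieval(turns: int, tokens_per_turn: int, retrieved_tokens: int) -> int:
    """Each call sends only the new turn plus a fixed-size retrieved context."""
    return turns * (tokens_per_turn + retrieved_tokens)


# Assumed workload: 1,000 turns of 200 tokens each, 800 retrieved tokens per call.
full = cumulative_tokens_full_history(turns=1000, tokens_per_turn=200)
selective = cumulative_tokens_with_retrieval(turns=1000, tokens_per_turn=200, retrieved_tokens=800)

print(f"full history: {full:,} tokens")       # 100,100,000
print(f"with retrieval: {selective:,} tokens")  # 1,000,000
```

Under these assumptions the full-history agent processes about a hundred times more input tokens over the same 1,000 turns, and the gap keeps widening as the workflow runs longer.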

Vanar Chain provides the infrastructure that makes this durable memory feasible. Its modular, scalable architecture ensures that persistent knowledge isn’t confined to a single node or runtime environment. Data integrity and security remain central; the knowledge objects Neutron manages are verifiable and queryable, ensuring that agents operate on trustworthy information. For organizations considering long-term AI deployments, this combination of Vanar and Neutron removes many practical barriers. Processes that require continuity, like treasury management, cross-border compliance, or customer support, benefit directly from memory that survives disruptions.

Another practical advantage is compounding intelligence. In conventional setups, an agent’s learning often resets with every session or deployment. With Neutron on Vanar, memory accumulates insights over time. Patterns recognized in past interactions are available for future reasoning, allowing agents to provide more informed responses and predictions. This is especially valuable in environments where agents support multi-agent systems. When multiple instances share a persistent memory layer, knowledge transfer occurs automatically, improving coordination without manual intervention.

From my perspective as someone observing infrastructure trends closely, this is a subtle but powerful shift. AI workflows become more predictable and durable, more like traditional IT services with uptime guarantees and operational consistency. Developers no longer need to engineer around the limitations of volatile memory or oversized context windows. Instead, they can focus on designing smarter workflows, confident that the underlying memory layer will maintain continuity. This also makes experimentation more feasible; agents can be tested, replaced, or scaled without losing historical insight.

The combination of Vanar Chain and Neutron is gaining traction for precisely these reasons. While mainstream discussions often focus on model size or raw performance, the true bottleneck for practical deployments has often been memory and continuity. By making memory a first-class, durable feature, Vanar and Neutron shift the conversation toward persistent intelligence. This aligns with trends seen in 2026, where businesses increasingly expect AI to function as a reliable, continuous service rather than a one-off tool.

Ultimately, the real innovation here isn’t just technical; it’s operational. Persistent memory on Vanar turns ephemeral AI agents into parts of a living system. Intelligence no longer depends on a single runtime or deployment cycle. Knowledge survives restarts, agents can be swapped without interruption, and workflows improve over time. For organizations, this means lower costs, reduced complexity, and systems that truly learn from their history. From a trader’s or developer’s perspective, that is a practical, measurable advantage that goes beyond the usual hype.

In summary, Vanar Chain’s integration with Neutron redefines what long-running agents can do. By separating memory from individual instances, compressing knowledge into queryable objects, and ensuring durability across restarts, the system makes persistent, compounding intelligence possible. Context windows remain manageable, costs stay controlled, and multi-agent workflows operate like real infrastructure. For 2026 and beyond, persistent memory on Vanar represents a new baseline for how AI agents learn, adapt, and support real-world operations.