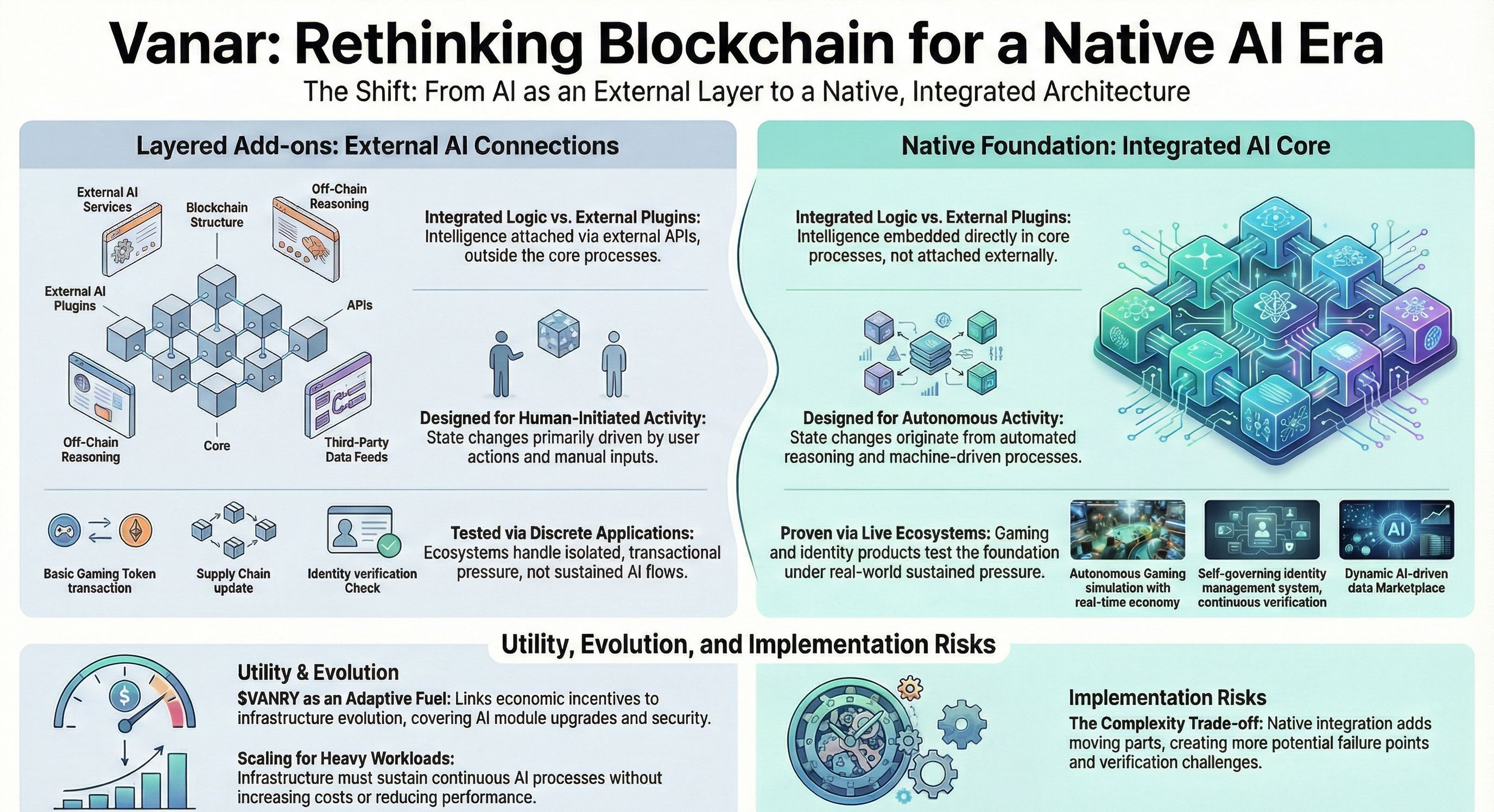

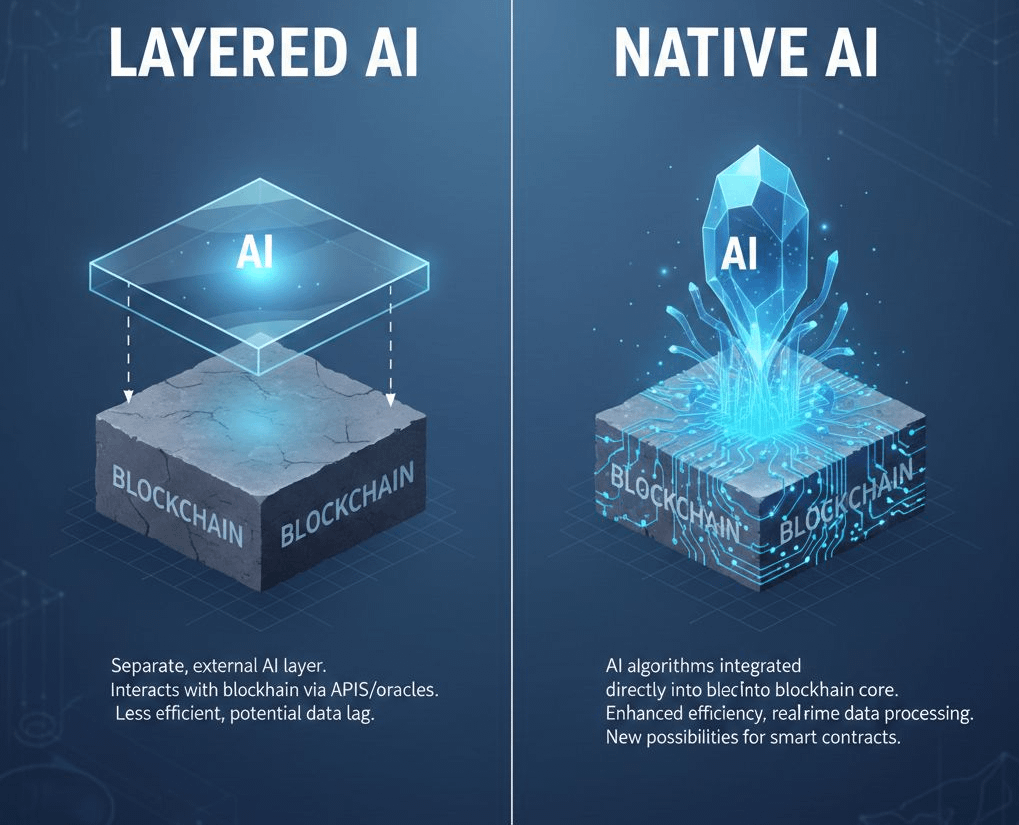

A subtle but important point about how AI is being integrated into most blockchains is that it is usually layered on top of the chain rather than emerging from within it. The result is useful, sometimes impressive, but not deeply rooted in the foundation.

Most established chains were originally built to process transactions in a predictable way. Consensus first. Throughput second. Everything else came later. So when AI entered the conversation, it was often attached through APIs, off-chain services, or data feeds that plug into smart contracts.

That works for lightweight use cases. But when AI systems need continuous data, frequent updates, and real-time feedback loops, I start to see the strain. Latency shows up. Costs increase if every small adjustment has to settle on-chain. Infrastructure that was designed for static code struggles with evolving models.
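To make the cost point concrete, here is a minimal sketch of how fees scale when every small adjustment settles on-chain versus when adjustments are grouped before settlement. The function name, the flat per-transaction fee, and the numbers are all hypothetical, chosen only for illustration; real fee models vary by chain and are not taken from any specific network.

```python
def settlement_fees(num_updates: int, fee_per_tx: int, batch_size: int = 1) -> int:
    """Total fee (in arbitrary integer units) to settle `num_updates` updates.

    Assumes a flat fee per transaction and that one transaction can carry
    up to `batch_size` updates. Both are simplifying assumptions.
    """
    if num_updates < 0 or fee_per_tx < 0 or batch_size < 1:
        raise ValueError("num_updates and fee_per_tx must be >= 0, batch_size >= 1")
    # Ceiling division: a partially filled batch still costs one transaction.
    num_txs = -(-num_updates // batch_size)
    return num_txs * fee_per_tx


# Hypothetical workload: 1,000 model adjustments at 5 fee units per transaction.
per_update = settlement_fees(1000, fee_per_tx=5)             # one tx per adjustment
batched = settlement_fees(1000, fee_per_tx=5, batch_size=50)  # 50 adjustments per tx
print(per_update, batched)
```

Under these assumptions the batched path is far cheaper, but it trades away immediacy: an adjustment is not final until its batch settles, which is exactly the latency pressure described above.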

Over time, the mismatch becomes visible. The chain starts to limit the intelligence rather than support it.

Designing for AI From Day One

When I think about an AI-first mindset, I don’t think about features. I think about assumptions.

If I design a blockchain for AI from day one, I assume that machine-driven processes will be constant. Not occasional. I assume that data flows won’t be clean and simple, but messy and adaptive. I assume that state changes might come from automated reasoning systems as often as from human users.

That changes how I approach infrastructure. AI moves from a support function to an integrated part of the network’s behavior. It takes an active role in core processes such as routing data, verifying computation, and determining how applications react to real-world inputs.

To me, native intelligence feels different from AI as an add-on. When AI is added later, it assists the chain. When it’s native, the chain expects it. The architecture is shaped around ongoing computation and adaptive logic, not just transaction batching.

It’s a small shift in framing. But underneath, it steadily changes design priorities.

How I See Vanar’s AI-First Approach

When I look at Vanar, I see a project trying to build with that assumption from the start.

Instead of presenting AI as a plugin, Vanar positions intelligence as part of its core stack. The architecture emphasizes data-driven applications, modular components, and infrastructure that can support dynamic, AI-assisted processes. The focus isn’t just on moving transactions quickly. It’s on allowing machine logic to interact with the chain without constant friction.

That design choice matters if AI-driven apps become more common. Gaming systems with adaptive logic. Digital identity frameworks that evolve based on behavior. Tokenized real-world data streams that update frequently. These use cases demand more than static execution.

What gives this positioning more weight, in my view, is that Vanar has live products running. There are applications in gaming infrastructure and digital identity already operating within its ecosystem. Real usage creates pressure. It tests whether the architecture can handle sustained interaction rather than controlled demos.

Early signs suggest steady activity inside its ecosystem, though broad mainstream adoption is still developing. Like most projects in this space, it remains exposed to overall market cycles.

Where VANRY Fits in the Structure

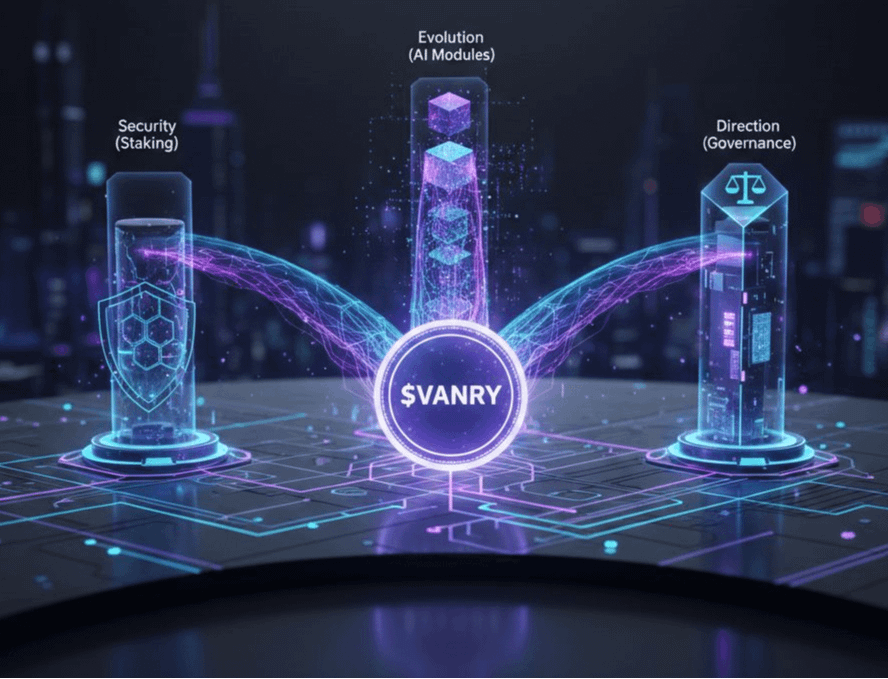

The role of VANRY makes more sense to me when I think about the network as an adaptive system.

$VANRY is not positioned only as a transaction token. It also supports staking, validator participation, and governance decisions. The token ties economic incentives to how the infrastructure evolves, including AI module upgrades and performance. Staking is meant to strengthen security and validator reliability, and token holders influence the protocol’s direction through governance. The framework is designed to align the AI foundation with community incentives and to reduce the risk of centralized control.

Of course, the token’s market value fluctuates daily like any digital asset. Volatility remains part of the equation. Even if the architecture is thoughtful, price behavior is influenced by broader crypto conditions.

The Risks I Keep in Mind

I don’t see AI-first design as automatically safer or stronger. It introduces complexity. More moving components mean more potential failure points. Verifying AI-generated outputs on-chain can be technically demanding, especially if off-chain computation plays a large role.
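One common way to tie an off-chain AI result to an on-chain record is a hash commitment: the chain stores only a digest, and anyone holding the full result can check it against that digest. The sketch below is a generic illustration of that idea, not a description of how Vanar or any other chain does it; the function names and the payload fields (`model_id`, `inputs`, `output`) are hypothetical.

```python
import hashlib
import json


def commitment(model_id: str, inputs: dict, output: dict) -> str:
    """Return a SHA-256 hex digest over a canonical JSON encoding of the result.

    Sorting keys and fixing separators keeps the encoding deterministic,
    so the same result always produces the same digest.
    """
    payload = json.dumps(
        {"model_id": model_id, "inputs": inputs, "output": output},
        sort_keys=True,
        separators=(",", ":"),
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def matches_record(recorded: str, model_id: str, inputs: dict, output: dict) -> bool:
    """Check a claimed off-chain result against the digest recorded on-chain."""
    return recorded == commitment(model_id, inputs, output)


# Hypothetical example: the digest is what would be stored on-chain.
recorded = commitment("risk-model-v1", {"account": "abc"}, {"score": 7})
print(matches_record(recorded, "risk-model-v1", {"account": "abc"}, {"score": 7}))
print(matches_record(recorded, "risk-model-v1", {"account": "abc"}, {"score": 9}))
```

This only shows that a result was not altered after it was committed. It says nothing about whether the model actually computed that output correctly, which is the harder part of the verification problem.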

There’s also adoption risk. Developers are comfortable with established ecosystems. If AI tools can run well enough on older chains, some teams may not feel urgency to migrate.

Scalability is another open question in my mind. AI workloads can be heavy and continuous. If usage grows significantly, the infrastructure will need to sustain that pressure without raising costs or reducing performance. That is not trivial.

Regulation adds another layer of uncertainty. AI and digital assets are both evolving fields, and policy decisions could influence how integrated systems operate across different regions.

Infrastructure Thinking: A Gradual Evolution

I see this as a gradual, broader transformation rather than an abrupt change. Blockchains were originally built for routine, human-initiated transactions. AI introduces machine-driven activity that never really sleeps.

If that trend continues, infrastructure may need to reflect it more deeply. Projects like Vanar represent one approach to building that alignment directly into the base layer instead of layering it on top.

Whether this model becomes standard remains to be seen. But the underlying question is persistent and hard to ignore.

If intelligence is going to be constant, shouldn’t the foundation expect it?